Telnyx

HD STT (Speech-to-text): What to test first

What HD STT is, how to test it, and where it shines in real-time Voice AI Agents. Quick tips, eval checklists, and examples.


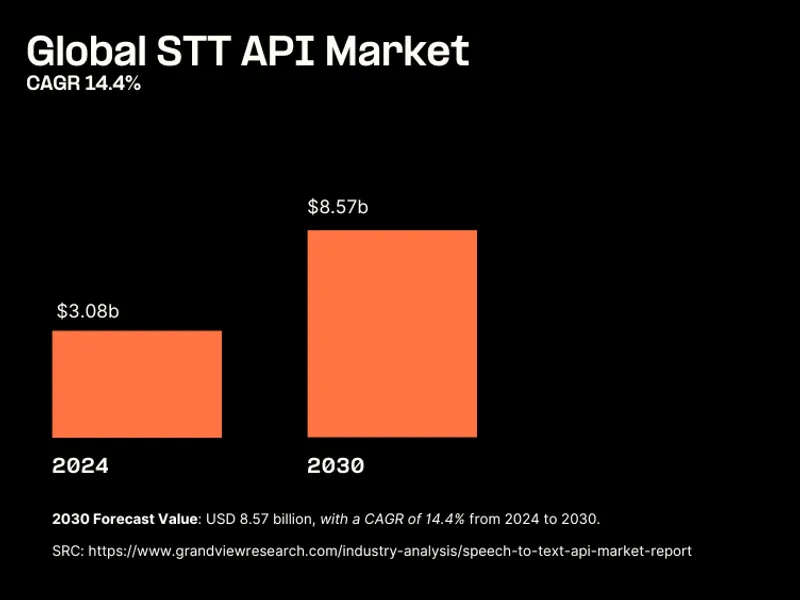

Voice AI agents are reshaping customer interactions. What separates successful deployments from the rest is choosing the right speech-to-text foundation. The global speech-to-text API market is projected to reach $8.57 billion by 2030, growing at 14.4% annually, yet many teams still struggle with fragmented vendor setups, unpredictable latency, and accuracy issues in production.

If you're evaluating STT for real-time voice AI agents, you need concrete benchmarks for accuracy, latency, and codec performance. This guide walks you through exactly what to test, how to measure results, and where HD codecs make the biggest impact.

Why HD codecs matter for transcription accuracy

Standard telephony uses narrowband audio (8 kHz sampling, ~300–3400 Hz). HD Voice, using G.722 or wideband Opus, samples at 16 kHz and extends usable bandwidth to ~7 kHz, which makes high-frequency consonants clearer (for example, "fifteen" vs. "fifty").
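A quick way to see why the sampling rate matters is the Nyquist limit: a signal sampled at a given rate can only represent frequencies below half that rate. The minimal Python sketch below uses the nominal passbands from the codec comparison table in this guide. The 5 kHz probe frequency is an illustrative assumption standing in for high-frequency consonant energy, not a measured value.

```python
# Nominal passbands (Hz) from the codec comparison in this guide.
G711_BAND = (300, 3400)  # narrowband telephony
G722_BAND = (50, 7000)   # wideband HD Voice


def nyquist_hz(sample_rate_hz: int) -> float:
    """Highest frequency a sampling rate can represent: half the sample rate."""
    return sample_rate_hz / 2


def band_width_hz(band: tuple[int, int]) -> int:
    """Width of a passband in Hz."""
    low, high = band
    return high - low


def passes(band: tuple[int, int], freq_hz: float) -> bool:
    """True if a frequency component falls inside the codec's nominal passband."""
    low, high = band
    return low <= freq_hz <= high


if __name__ == "__main__":
    # 5 kHz is an illustrative stand-in for consonant energy (assumption).
    probe_hz = 5000
    for name, rate, band in [("G.711", 8000, G711_BAND), ("G.722", 16000, G722_BAND)]:
        print(f"{name}: Nyquist {nyquist_hz(rate):.0f} Hz, "
              f"passband {band_width_hz(band)} Hz wide, "
              f"passes {probe_hz} Hz: {passes(band, probe_hz)}")
```

Narrowband audio tops out at 4 kHz by construction, so any consonant energy above that is simply absent from what the STT engine receives.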

Consider this: 73% of organizations cite accuracy as the primary obstacle to voice tech adoption, while 66% struggle with accent and dialect recognition. HD codecs address these challenges directly by capturing far more of the frequency range of human speech. In practical terms, HD audio delivers:

- Clearer distinction between similar-sounding words and numbers

- Better performance in noisy environments

- More accurate transcription of accented speech

- Reduced need for caller repetition

Your STT evaluation checklist

Test accuracy across real-world scenarios

Start with these baseline tests to measure transcription accuracy:

Numbers and alphanumerics

- Phone numbers: "(555) 867-5309"

- Account numbers: "AC-4829-JX-7B"

- Credit card digits: Read 16-digit sequences

- Confirmation codes: "Charlie-Seven-Bravo-Nine"

Similar-sounding words

- Medical terms: "hypertension" vs. "hypotension"

- Financial terms: "debit" vs. "credit"

- Common confusions: "can" vs. "can't" (especially critical for consent)

Background noise conditions

- Coffee shop ambient noise (65-70 dB)

- Call center environment (multiple voices)

- Outdoor/street noise (variable 70-80 dB)

- Poor cellular connection simulation
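The levels above describe the caller's acoustic environment. For repeatable offline tests, one common approach (our suggestion, not a method prescribed by any vendor) is to mix clean recordings of your test prompts with recorded noise at a fixed signal-to-noise ratio (SNR). The sketch below assumes mono audio held as Python float lists at a shared sample rate; how a given dB SPL environment maps to an SNR is something you would calibrate with your own recordings.

```python
import math
import random


def mean_power(samples: list[float]) -> float:
    """Average power of a signal: the mean of its squared samples."""
    return sum(s * s for s in samples) / len(samples)


def mix_at_snr(speech: list[float], noise: list[float], snr_db: float) -> list[float]:
    """Add `noise` to `speech`, scaled so the speech-to-noise power ratio equals snr_db.

    Assumes both clips share one sample rate and that `noise` is at least
    as long as `speech`; extra noise samples are discarded.
    """
    if len(noise) < len(speech):
        raise ValueError("noise clip must be at least as long as the speech clip")
    noise = noise[: len(speech)]
    p_noise = mean_power(noise)
    if p_noise == 0:
        raise ValueError("noise clip is silent")
    # Target noise power is P_speech / 10^(SNR/10); amplitude scales by its square root.
    target_noise_power = mean_power(speech) / (10 ** (snr_db / 10))
    scale = math.sqrt(target_noise_power / p_noise)
    return [s + scale * n for s, n in zip(speech, noise)]


if __name__ == "__main__":
    rate = 16_000
    # Synthetic stand-ins (assumption): a 440 Hz tone for speech, uniform noise for background.
    speech = [0.5 * math.sin(2 * math.pi * 440 * t / rate) for t in range(rate)]
    rng = random.Random(0)
    noise = [rng.uniform(-1.0, 1.0) for _ in range(rate)]
    for snr in (20, 10, 5, 0):
        mixed = mix_at_snr(speech, noise, snr)
        residual = [m - s for m, s in zip(mixed, speech)]
        achieved = 10 * math.log10(mean_power(speech) / mean_power(residual))
        print(f"target {snr:>2} dB -> achieved {achieved:.2f} dB")
```

Stepping from 20 dB down to 0 dB SNR gives each scenario a simple difficulty ladder, so WER can be tracked against noise level rather than reported as a single number.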

Track word error rate (WER) for each scenario. Your production STT should target sub-5% WER for critical use cases like payment processing or medical terminology.
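WER counts the substitutions, deletions, and insertions needed to turn the STT output into the reference transcript, divided by the number of reference words. A minimal sketch using word-level edit distance follows. It only lowercases and splits on whitespace, so any normalization of punctuation or number formatting is an assumption left to your test harness.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Lowercases and splits on whitespace only; any further normalization
    (punctuation, number formatting) is left to the caller.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] holds the edit distance between the reference words processed
    # so far (one row back) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution (or match when cost is 0)
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    wer = word_error_rate("i can confirm the payment", "i can't confirm the payment")
    print(f"WER = {wer:.0%}, meets sub-5% target: {wer < 0.05}")
```

For the consent example from the checklist, the "can" vs. "can't" error yields a WER of 0.2: one substitution out of five words, yet one that flips the meaning. That is why an aggregate WER target works best alongside targeted checks on critical terms.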

Measure latency at every step

Round-trip latency determines whether your voice AI feels conversational or robotic. Break down your measurements:

Audio capture to transcription

- Target: Under 300ms for initial transcription

- Measure: First partial result timing

- Critical path: Network hop from PSTN to STT processor
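Time to first partial can be measured on the client side by timestamping when speech audio starts streaming and when the first non-empty result arrives. The sketch below assumes a hypothetical `TranscriptEvent` shape that you would map your STT provider's streaming messages onto. It also reports a median and 95th percentile, since a single call says little about tail latency.

```python
import statistics
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class TranscriptEvent:
    """One streaming STT result. Hypothetical shape; map your provider's messages onto it."""
    received_at: float  # seconds, same clock as audio_started_at (e.g. time.monotonic())
    is_final: bool
    text: str


def first_partial_latency_ms(
    audio_started_at: float, events: Iterable[TranscriptEvent]
) -> Optional[float]:
    """Milliseconds from the start of speech audio to the first non-empty result."""
    for event in events:
        if event.text.strip():
            return (event.received_at - audio_started_at) * 1000
    return None  # no usable result arrived


def summarize_ms(latencies_ms: list[float]) -> dict[str, float]:
    """Median and 95th percentile across many test calls (needs at least two samples)."""
    cuts = statistics.quantiles(latencies_ms, n=20)  # 19 cut points: 5th ... 95th percentile
    return {"p50": statistics.median(latencies_ms), "p95": cuts[-1]}
```

Comparing the p95, not only the median, against the 300 ms target shows whether slow outliers will surface as awkward pauses for callers.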

End-to-end conversation flow

- User finishes speaking

- STT processes final utterance (100-200ms)

- LLM generates response (500-1500ms)

- TTS synthesizes speech (100-200ms)

- Audio streams to caller (50-100ms)

Total target: Under 2 seconds for complete turn-taking. When STT, TTS, and telephony infrastructure share the same network backbone, you eliminate 100-300ms of inter-vendor latency.
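Summing the stage ranges above shows how little slack the 2-second target leaves. The sketch below simply adds the best- and worst-case figures from the list; the optional overhead parameter models the 100–300 ms of inter-vendor latency mentioned above.

```python
# Per-stage ranges (ms) from the end-to-end breakdown: (best case, worst case).
STAGES_MS = {
    "stt_final_utterance": (100, 200),
    "llm_response": (500, 1500),
    "tts_synthesis": (100, 200),
    "audio_to_caller": (50, 100),
}
TURN_TARGET_MS = 2000


def turn_budget_ms(stages: dict[str, tuple[int, int]], extra_ms: int = 0) -> tuple[int, int]:
    """Best- and worst-case turn latency, plus fixed overhead such as inter-vendor hops."""
    best = sum(lo for lo, _ in stages.values()) + extra_ms
    worst = sum(hi for _, hi in stages.values()) + extra_ms
    return best, worst


if __name__ == "__main__":
    for label, extra in [("single network", 0), ("+300 ms inter-vendor hops", 300)]:
        best, worst = turn_budget_ms(STAGES_MS, extra)
        print(f"{label}: {best}-{worst} ms, worst-case headroom {TURN_TARGET_MS - worst} ms")
```

With the listed ranges, a turn takes 750–2,000 ms, so the worst case already sits exactly at the target with zero headroom. Adding the upper 300 ms of inter-vendor overhead pushes the worst case to 2,300 ms, over budget before any retries or network jitter.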

Compare codec performance

Run identical test scripts through different codec configurations:

| Codec | Sampling rate | Bitrate | Frequency range | Best for |
|---|---|---|---|---|
| G.711 (narrowband) | 8 kHz | 64 kbps | 300–3400 Hz | Legacy systems, basic telephony |
| G.722 (HD voice) | 16 kHz | 64 kbps | 50–7000 Hz | Business VoIP, clear conversations |
| Opus (modern HD) | 8–48 kHz (variable) | 6–510 kbps (adaptive) | Up to 20 kHz (fullband), adjusted dynamically | Voice AI, music, maximum flexibility |

Document differences in WER and related quality metrics, particularly for:

- Speaker identification accuracy

- Emotion/sentiment detection

- Technical terminology

- Non-native speaker comprehension
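Once each codec configuration has run the same scripts, relative WER reduction makes the differences easier to read than raw percentages. The sketch below assumes you have already computed WER per codec and per test category (the category names are up to you) and treats G.711 as the baseline. It covers WER only, so speaker identification and sentiment results need their own metrics.

```python
def relative_wer_reduction(baseline_wer: float, candidate_wer: float) -> float:
    """Fractional WER improvement over the baseline (0.25 means 25% fewer errors)."""
    if baseline_wer == 0:
        raise ValueError("baseline WER is zero; compare absolute differences instead")
    return (baseline_wer - candidate_wer) / baseline_wer


def compare_codecs(
    wer_by_codec: dict[str, dict[str, float]], baseline: str
) -> dict[str, dict[str, float]]:
    """wer_by_codec[codec][category] holds WER measured on identical test scripts.

    Returns, for each non-baseline codec, the relative WER reduction per category.
    """
    base = wer_by_codec[baseline]
    return {
        codec: {cat: relative_wer_reduction(base[cat], wer) for cat, wer in per_cat.items()}
        for codec, per_cat in wer_by_codec.items()
        if codec != baseline
    }
```

A negative value for a category means the candidate codec did worse than the baseline there, which is worth investigating before rolling it out.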

Sample prompts for production testing

Healthcare appointment scheduling

"I need to schedule a follow-up for my hypertension medication.

My doctor is James Martinez—that's M-A-R-T-I-N-E-Z.

I'm available Tuesday after 2:30 p.m. or Thursday morning before 11."

Test for: Medical term accuracy, name spelling, time parsing

Financial services verification

"My account number is 4-8-2-9-6-7-3-5-1.

I'd like to transfer fifteen hundred dollars, not fifty hundred.

The routing number is 0-2-1-0-0-0-0-2-1."

Test for: Numeric precision, amount disambiguation, security compliance
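For prompts like this one, aggregate WER can hide a single wrong digit, so it helps to check numeric fields for exact matches. The sketch below extracts digit runs (ignoring spaces, hyphens, dots, and commas between digits) and compares them with expected values. It assumes your STT output renders numbers as numerals; if it writes them as words ("fifteen hundred"), add a normalization step first. Because commas count as separators, two numbers written as "12, 34" would merge, a known limitation of this simple approach.

```python
import re

# A digit, then any further digits separated only by spaces, hyphens, dots, or commas.
DIGIT_RUN = re.compile(r"\d(?:[\s.,-]*\d)*")


def digit_sequences(transcript: str) -> list[str]:
    """Extract digit runs and strip separators, so '4-8-2-9' and '4829' compare equal."""
    return [re.sub(r"\D", "", m.group()) for m in DIGIT_RUN.finditer(transcript)]


def check_numeric_fields(transcript: str, expected: dict[str, str]) -> dict[str, bool]:
    """For each named field, True only if its exact digit string appears in the transcript."""
    found = set(digit_sequences(transcript))
    return {name: digits in found for name, digits in expected.items()}


if __name__ == "__main__":
    expected = {"account": "482967351", "amount": "1500", "routing": "021000021"}
    # Hand-written transcript for illustration (not real STT output).
    transcript = ("My account number is 4-8-2-9-6-7-3-5-1. I'd like to transfer $1,500, "
                  "not $5,000. The routing number is 021000021.")
    print(check_numeric_fields(transcript, expected))
```

With the hand-written transcript above, all three fields match. Transposing two digits of the account number flips "account" to False, even though WER would barely move.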

Technical support troubleshooting

"The error code is E-X-4-0-9-dash-B-R-K-T.

I've already tried restarting the router and clearing the DNS cache.

My firmware version is 12.3.45 build 2879."

Test for: Alphanumeric accuracy, technical terminology, version number parsing

Multi-accent retail ordering

[Test with various accents]

"I'd like three large lattes with oat milk,

two chocolate croissants. Actually, make that three,

and one gluten-free blueberry muffin."

Test for: Accent handling, quantity changes, dietary terminology
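Beyond WER, each prompt has a handful of terms that must come through exactly. A simple check is whole-word or whole-phrase recall of those key terms, so that "hypotension" does not count as a hit for "hypertension". The key-term lists below are our own picks from the sample scripts, and the normalization is ASCII-only, an assumption to revisit for names with accented characters.

```python
import re


def _normalize(text: str) -> str:
    """Lowercase, turn non-ASCII-alphanumerics (except hyphens) into spaces, collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9-]+", " ", text.lower()).split())


def missing_terms(transcript: str, key_terms: list[str]) -> list[str]:
    """Key terms that do not appear as whole words or phrases in the transcript."""
    padded = f" {_normalize(transcript)} "
    return [term for term in key_terms if f" {_normalize(term)} " not in padded]


# Key terms per sample prompt, picked from the scripts above (adjust per use case).
KEY_TERMS = {
    "healthcare": ["hypertension", "martinez", "tuesday", "thursday"],
    "tech_support": ["router", "dns cache", "firmware"],
    "retail": ["oat milk", "chocolate croissants", "gluten-free", "blueberry muffin"],
}

if __name__ == "__main__":
    # Hand-written transcript with one deliberate error, for illustration only.
    transcript = "I need to schedule a follow-up for my hypotension medication."
    print(missing_terms(transcript, ["hypertension", "follow-up", "medication"]))
```

Reporting the missing terms per prompt, next to WER, makes it obvious when a transcript scores well overall but gets the one word that matters wrong.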

Where HD STT delivers maximum impact

Contact center operations

When paired with HD codecs, speech-to-text (STT) delivers measurable impact: shorter handle times, better first-call resolution, and fewer back-and-forths. When agents and AI assistants hear customers clearly, they resolve issues without asking callers to repeat themselves.

Real-world impact:

- Fewer repetition requests, because HD audio captures a much wider frequency range

- Higher customer satisfaction scores from natural conversation flow

- Better compliance recording quality for regulated industries

Voice AI agents at scale

One forecast projected that AI would handle 95% of customer interactions by 2025. That future needs high-fidelity audio to work, and HD STT delivers the clarity needed for natural conversation at scale.

- Consistent accuracy across diverse caller populations

- Reliable performance in variable network conditions

- Seamless handoff between AI and human agents

Why fragmented infrastructure fails at scale

Most teams cobble together STT from one vendor, telephony from another, and TTS from a third. This fragmented approach creates three critical problems:

Latency compounds across vendors: Every network hop between services adds extra latency. When your audio travels from a telecom provider to a cloud STT service, then to your application, then to a TTS provider, you're adding 200-400ms before you even start processing. That's the difference between a natural conversation and an awkward pause.

Costs spiral unpredictably: Multiple vendors mean multiple markups, extra fees, and per-minute charges that vary by region. You're paying for the same audio to traverse multiple networks, with each vendor taking their cut.

Compliance becomes a nightmare: Try explaining to auditors why PHI flows through three different companies' infrastructure, each with their own data residency policies and security certifications. One weak link compromises your entire compliance posture.

The Telnyx advantage: Full-stack voice infrastructure

Telnyx built what others can't: native telecom infrastructure with AI processing on the same network. Here's why that matters:

Colocated GPUs at telecom PoPs: While competitors route your audio to generic cloud regions, Telnyx runs STT/TTS processing directly at telecom points of presence. Your audio never leaves our private IP network, which consistently keeps round-trip latency under 200 ms.

True single-vendor simplicity

- Native PSTN connectivity (we're a Tier-1 carrier, not a reseller)

- HD codecs at no extra charge

- Programmable call control with native STT and TTS options

- One bill, one BAA/DPA, one support team

Regional compliance built in: Deploy in the regions you need with automatic data residency. Compliance coverage for HIPAA, GDPR, and other frameworks spans the entire stack, not just pieces of it.

Start testing with production-ready infrastructure

HD STT performance depends on your entire voice stack working together. Begin with these steps:

For Voice API and TeXML:

- Sign up or log in to your Telnyx Mission Control Portal account.

- Buy a number with HD codecs

- Configure your Voice API or TeXML application

- Use the STT command and choose your STT engine

- Make a call to confirm real-time transcription is working as expected
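For the Voice API path, using the STT command means issuing a call-control action against a live call. The sketch below builds that HTTP request with only the Python standard library and does not send it. The base URL, the endpoint path (`/calls/{call_control_id}/actions/transcription_start`), and the body fields `transcription_engine` and `language` are assumptions based on Telnyx's Call Control API; check the current API reference for exact field names and accepted engine values before relying on them.

```python
import json
import urllib.parse
import urllib.request

API_BASE = "https://api.telnyx.com/v2"  # assumed base URL; confirm in the Telnyx API reference


def build_transcription_start(
    call_control_id: str, api_key: str, engine: str, language: str = "en"
) -> urllib.request.Request:
    """Build (but do not send) a request that starts real-time transcription on a live call.

    Endpoint path and field names are assumptions to verify against the Call Control docs.
    """
    body = {
        "transcription_engine": engine,  # assumed field; accepted values per the docs
        "language": language,            # assumed field; caller's language code
    }
    call_id = urllib.parse.quote(call_control_id, safe="")
    return urllib.request.Request(
        url=f"{API_BASE}/calls/{call_id}/actions/transcription_start",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
```

Once you have a real call control ID (for example, from the webhook your application receives when a call is answered), you could send the request with `urllib.request.urlopen(req)` and then place a test call to confirm transcripts arrive at your webhook endpoint.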

For AI Agents:

- Sign in to your Telnyx Mission Control Portal

- Navigate to AI, Storage and Compute → AI Assistants

- Create a new assistant

- Test STT directly in the builder

When you're ready to test with infrastructure purpose-built for voice AI, Telnyx offers a fundamentally different approach. As a Tier-1 carrier with colocated AI processing, we deliver what fragmented setups can't: predictable low latency, native HD codec support at no extra charge from ingress to processing, and compliance that covers your entire voice stack.

Ready to test HD STT on infrastructure that actually delivers? Connect with Telnyx to run your evaluation scripts on our integrated voice platform and see the difference full-stack control makes.

