Last updated 27 Nov 2023

Building a low-latency conversational AI chatbot

Contact our team to give your next AI project a boost with a Telnyx-powered chatbot. Or sign up for a free Telnyx account to test the power of Telnyx Inference for yourself.

With the ability to help businesses enhance customer experiences and streamline operations, organizations of all sizes are looking to leverage the capabilities of conversational artificial intelligence (AI).

When implemented effectively, conversational AI is a clear asset. It can turn routine customer service interactions into highly engaging experiences while providing personalized, efficient support.

But when it misses the mark, conversational AI can cause frustration for customers. Slow and impersonal responses, misunderstood commands, or poor call quality can put additional strain on customer service teams—the exact thing conversational AI is designed to help you avoid.

With Telnyx Inference, companies can build world-class conversational AI chatbots to help generate business growth and enhance customer satisfaction.

Telnyx and voice AI

As a CPaaS, we’re continuing to develop internal tooling that combines our communications expertise with the latest and greatest advancements in the AI space.

Enzo Piacenza, a software engineer at Telnyx, recently showcased the first iteration of a conversational AI bot built on Telnyx’s Voice API.

Using next-gen features like our in-house speech-to-text (STT) engine, HD voice, and noise suppression, Enzo was able to produce an accurate conversational AI tool.

But using top-notch tools is only one piece of the puzzle when crafting high-quality conversational AI chatbots. The next step was to reduce the latency of the bot between conversation turns for quick, human-like responses—because no one likes long awkward pauses.

Let’s hear from Enzo how he improved latency and responses using Telnyx APIs.

Building towards real-time communication with a conversational AI chatbot

In the previous iteration of the AI voice bot, there was a clear delay between human speech and the bot’s spoken answer. While the information was accurate, the delay made the interaction feel unnatural and could frustrate customers. This frustration could lead to reduced engagement and satisfaction.

Since the goal is to get closer to real-time communication, I set out to reduce the conversational AI bot’s response latency.

I made two major changes from the V1 bot to help me achieve the desired low-latency responses from the AI bot:

- I used the streaming feature of the Telnyx Voice API to send chunks of the LLM’s response to the human.

- I started using the new Telnyx Inference product with a quantized Llama 2 model as my LLM. It generates more tokens per second, which gives me a faster LLM response.

Streaming before speaking

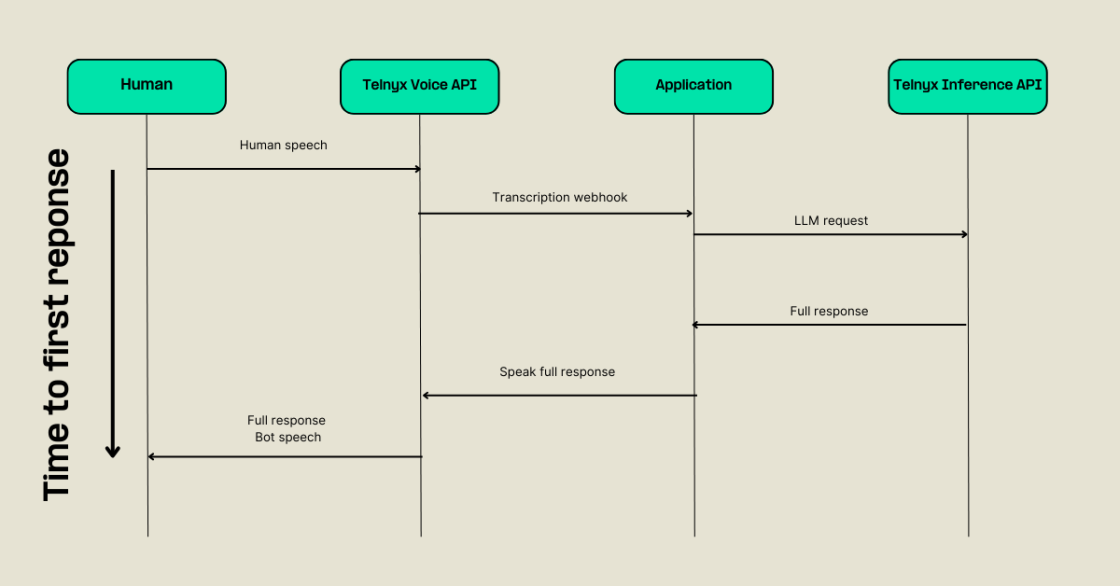

In the first iteration of the Voice AI bot, I used a very simple system:

In this system, the application had to wait to receive the transcription webhook of the entire question from the Telnyx Voice API before it could start generating a full response. This process included a pause to ensure that the question had been asked in its entirety, which added extra latency to the bot.

Clearly, this system didn’t optimize for the low-latency interaction required for an engaging—and ultimately successful—conversational AI chatbot.

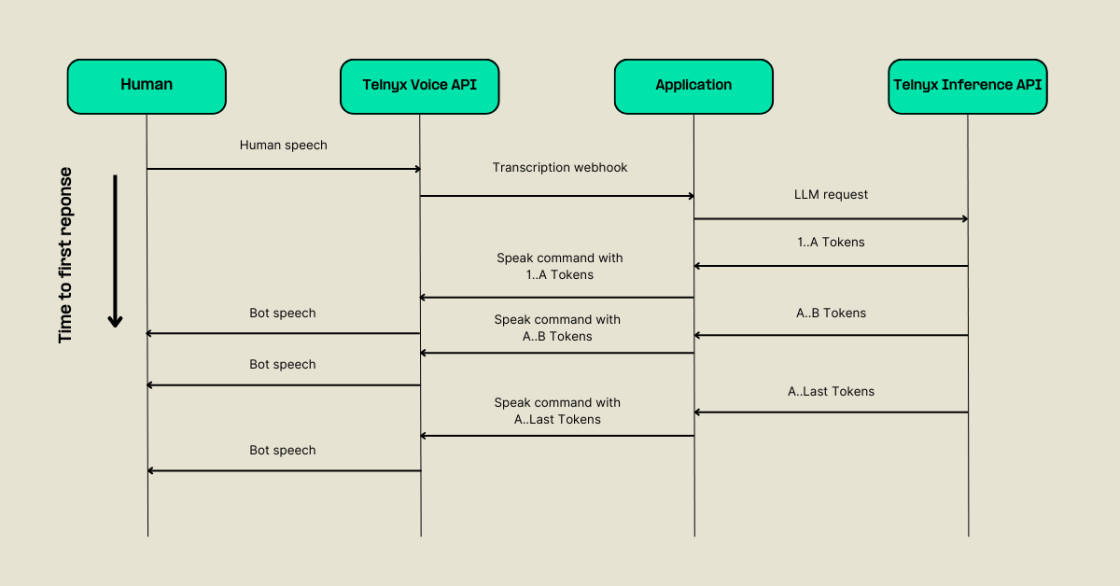

In the second iteration, I reduced the delay by implementing the following method:

Here’s how it works:

- The process starts with human speech, prompting a Telnyx transcription webhook to be received by the app.

- The app makes a request to Telnyx Inference to stream the response—without waiting for the full answer to be returned. This important change ensures that a response is relayed back to the customer as soon as it’s received.

- The app keeps sending speak commands with additional sentences as they arrive from the stream.

Adding streaming capabilities means the bot can start speaking before the full answer has been generated, reducing time to response.
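
As a rough illustration of the flow above, here is a minimal Python sketch of the sentence-by-sentence relay. It is not the code from the demo. The names `sentences_from_stream` and `relay` are hypothetical, and the `speak` callback is an assumption that stands in for whatever issues the Voice API speak command. The input is any iterable of text chunks, such as the pieces of a streamed LLM response.

```python
import re
from typing import Callable, Iterable, Iterator

# A sentence ends at '.', '!' or '?' followed by whitespace.
# This is a simplification: abbreviations such as "Dr. Smith" would split early.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(chunks: Iterable[str]) -> Iterator[str]:
    """Yield each complete sentence as soon as it appears in the chunk stream."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence.
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        # The last part may still be growing, so keep it in the buffer.
        buffer = parts[-1]
    # Flush whatever remains once the stream has ended.
    if buffer.strip():
        yield buffer.strip()


def relay(chunks: Iterable[str], speak: Callable[[str], None]) -> int:
    """Call the (hypothetical) speak callback once per sentence; return the count."""
    count = 0
    for sentence in sentences_from_stream(chunks):
        speak(sentence)
        count += 1
    return count


if __name__ == "__main__":
    # Simulated LLM stream; a real app would iterate over the streamed response.
    stream = ["Hello", " there. How", " can I help", " you today? Sure", "."]
    relay(stream, print)
```

The first sentence reaches the `speak` callback as soon as its closing punctuation and the following whitespace arrive, while later sentences are still being generated.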

Using Telnyx Inference to enhance my conversational AI bot

Telnyx Inference provides a number of LLMs to use in my app. In this case, I chose to work with a quantized Llama 2 model, as it can provide 80 tokens per second. The higher token throughput reduces the latency of the chatbot’s response, making for a more human-like interaction.
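
To get a feel for what 80 tokens per second means in practice, the back-of-the-envelope sketch below estimates how long generation takes. The sentence and answer lengths are assumptions chosen only for illustration, and the calculation ignores network round trips, time to first token, and text-to-speech time.

```python
def generation_time(tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to generate `tokens` at a steady `tokens_per_second`."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return tokens / tokens_per_second


RATE = 80.0                 # tokens per second, the figure quoted above
FIRST_SENTENCE_TOKENS = 20  # assumed length of the first sentence
FULL_ANSWER_TOKENS = 100    # assumed length of the whole answer

first = generation_time(FIRST_SENTENCE_TOKENS, RATE)
full = generation_time(FULL_ANSWER_TOKENS, RATE)
print(f"first sentence generated after {first:.2f} s")
print(f"full answer generated after {full:.2f} s")
```

Under these assumptions, the first sentence is generated in 0.25 seconds and the full answer in 1.25 seconds, which is why combining a fast model with sentence-level streaming shortens the pause before the bot starts speaking.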

Build your own voice AI bot with Telnyx

The future of customer engagement is rooted in the seamless integration of advanced AI with robust communication technologies. The era of waiting for delayed responses or dealing with inefficient chatbots is fading away thanks to the innovative strides made in this field.

You can achieve real-time, efficient, intelligent communication by using AI's rapid processing capabilities and Telnyx's powerful, low-latency communication infrastructure. As demonstrated by Enzo’s low-latency demo—built exclusively with Telnyx tools—this combination ensures your chatbot is smart, incredibly swift, and reliable.

Moreover, the scalability and flexibility offered by Telnyx mean that your conversational AI chatbot can grow and evolve no matter the size of your business or the complexity of your requirements. This adaptability ensures that your chatbot remains a step ahead as your business expands and customer needs change, consistently delivering top-notch service.
