
Crafting conversational AI: Speech-to-text (STT)

Speech-to-text (STT) technology is the engine that makes voice-enabled conversational AI chatbots run.

This blog post is Part Three of a four-part series where we’ll discuss conversational AI and the developments making waves in the space. Check out Parts One (on HD voice codecs) and Two (on noise suppression) to learn more about the features that can help you create high-performance conversational AI tools.

Imagine a world where machines understand and respond to human speech as naturally as another person would. This isn't a distant dream but a reality that's unfolding right now, thanks to the advancements in speech-to-text (STT) and conversational AI technologies.

As a developer, you're at the forefront of this revolution, building chatbots that are becoming increasingly sophisticated. But the landscape is changing rapidly. New tools, techniques, and emerging trends can help you take your chatbots to the next level.

What if you could build chatbots that understand multiple languages, accents, and dialects? What if your chatbots could maintain the context of a conversation, handle multiple topics simultaneously, and even exhibit empathy? With the latest developments in STT and conversational AI, these are realities waiting to be explored.

In this blog post, we'll dive into the intricacies of STT and conversational AI, explore the latest trends, and provide insights into how you can leverage these technologies to enhance your chatbots.

What is speech-to-text (STT), and how does it intersect with conversational AI?

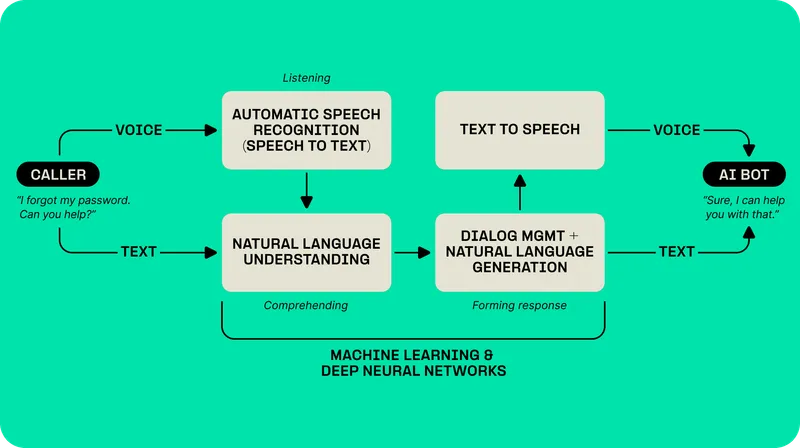

Speech-to-text technology is a critical component of conversational AI systems. It serves as the initial step in the process of understanding and responding to spoken language.

In the context of conversational AI, STT works by converting spoken language into written text. This conversion is crucial because it allows the AI to process and understand the user's spoken input.

Once the spoken words are converted into text, other components of the conversational AI system, such as natural language processing (NLP) and natural language understanding (NLU), can analyze the text to understand its meaning, context, and intent.

After the AI system understands the user's intent, it can generate an appropriate response. This response is typically in text form, which can then be converted back into speech using text-to-speech (TTS) technology, allowing the AI to respond verbally to the user.
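The four stages above can be sketched as a chain of functions, one per stage. This is a minimal illustration, not a real STT or TTS integration: `stub_stt`, `stub_nlu`, `stub_tts`, `handle_turn`, and the intent names are all hypothetical placeholders. The "audio" is just UTF-8 text, so the example runs without any speech engine.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Intent:
    name: str
    transcript: str


def stub_stt(audio: bytes) -> str:
    """Hypothetical STT stage: treats the 'audio' bytes as already-spoken text."""
    return audio.decode("utf-8")


def stub_nlu(text: str) -> Intent:
    """Hypothetical NLU stage: keyword matching in place of a real model."""
    lowered = text.lower()
    if "balance" in lowered:
        return Intent("check_balance", text)
    if "agent" in lowered or "human" in lowered:
        return Intent("transfer_to_agent", text)
    return Intent("fallback", text)


RESPONSES = {
    "check_balance": "You can find your balance under Accounts.",
    "transfer_to_agent": "Connecting you to an agent now.",
    "fallback": "Sorry, could you rephrase that?",
}


def stub_tts(text: str) -> bytes:
    """Hypothetical TTS stage: returns encoded text instead of synthesized audio."""
    return text.encode("utf-8")


def handle_turn(audio: bytes,
                stt: Callable[[bytes], str] = stub_stt,
                nlu: Callable[[str], Intent] = stub_nlu,
                tts: Callable[[str], bytes] = stub_tts) -> bytes:
    transcript = stt(audio)          # 1. speech -> text
    intent = nlu(transcript)         # 2. text -> intent
    reply = RESPONSES[intent.name]   # 3. intent -> response text
    return tts(reply)                # 4. response text -> speech


print(handle_turn(b"What's my balance?"))
```

Each stage is passed in as a function, so a real STT engine, NLU model, or TTS voice can replace a stub without changing the rest of the pipeline.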

Top benefits of using STT to build conversational AI tools

STT enhances user experience, promotes inclusivity, and drives efficiency, making it an indispensable asset in every conversational AI developer’s toolkit. Below, we’ll explore these benefits in detail and discuss the transformative potential of STT in crafting sophisticated conversational AI tools.

It can help you create an enhanced user experience

STT allows users to interact with AI tools using their natural speech, making the interaction more intuitive and user-friendly. This intuitiveness is particularly beneficial for users who struggle with typing or prefer speaking over writing.

It makes your tools more accessible

STT technology makes conversational AI tools more accessible to people with disabilities. For instance, individuals with visual impairments or motor challenges can use their voices to interact with these tools, making them more inclusive.

Real-time conversations improve efficiency

Speaking is often faster than typing, especially for lengthy or complex instructions. STT technology can process spoken commands quickly, improving the efficiency of user interactions.

STT technology can also transcribe speech in real time, enabling immediate responses from the AI system. Real-time responses help you leverage the efficiency of automation and improve the user experience by providing responses at a more “natural” pace.
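Real-time transcription usually means sending audio to the engine in small frames as it arrives, instead of waiting for the caller to finish speaking. The sketch below shows the frame-size arithmetic for raw 16 kHz, 16-bit mono PCM in 20 ms frames (common values, assumed here for illustration) and feeds the frames to `HypotheticalStreamingRecognizer`, an invented stand-in for a streaming STT client. It records where a real engine would emit interim text.

```python
from typing import Iterator, List


def frame_bytes(sample_rate: int = 16_000, sample_width: int = 2,
                channels: int = 1, frame_ms: int = 20) -> int:
    """Size in bytes of one frame of raw (uncompressed) PCM audio."""
    samples_per_frame = sample_rate * frame_ms // 1000   # 16,000 * 20 / 1000 = 320
    return samples_per_frame * sample_width * channels   # 320 * 2 * 1 = 640


def stream_frames(pcm: bytes, size: int) -> Iterator[bytes]:
    """Yield fixed-size frames as they would arrive from a live call."""
    for start in range(0, len(pcm), size):
        yield pcm[start:start + size]


class HypotheticalStreamingRecognizer:
    """Stand-in for a streaming STT client (not a real library API).

    A real engine would decode audio incrementally and emit interim text;
    this stub only records when an interim result would be produced.
    """

    def __init__(self, partial_every: int = 5):
        self.partial_every = partial_every   # frames between interim results
        self.frames_seen = 0
        self.partials: List[str] = []

    def feed(self, frame: bytes) -> None:
        self.frames_seen += 1
        if self.frames_seen % self.partial_every == 0:
            self.partials.append(f"interim result after {self.frames_seen} frames")


# One second of silent 16 kHz, 16-bit mono audio, fed frame by frame.
size = frame_bytes()
audio = bytes(size * 50)
recognizer = HypotheticalStreamingRecognizer()
for frame in stream_frames(audio, size):
    recognizer.feed(frame)
```

With these defaults, one second of audio arrives as 50 frames of 640 bytes, and the stub produces an interim result every 100 ms (5 frames of 20 ms each). Smaller frames lower latency at the cost of more per-message overhead.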

Multilingual support helps you reach a larger audience

Modern STT systems can understand and transcribe multiple languages, dialects, and accents. Understanding multiple languages allows you to use your conversational AI tools to cater to a global audience.

Contextual understanding gives users the answers they need

Advanced STT systems use machine learning (ML) models that take the surrounding conversation into account, which improves the accuracy of transcriptions and the overall effectiveness of the conversational AI tool.
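One simple way context can improve transcription is to rescore an engine's top candidate transcripts (its "n-best list") and favor candidates that mention terms from earlier in the conversation. The sketch below is a simplified illustration of that idea, not how any particular engine implements contextual biasing. The n-best list, its scores, and the `boost` value are invented for the example.

```python
from typing import List, Set, Tuple


def rescore(hypotheses: List[Tuple[str, float]], context_terms: Set[str],
            boost: float = 0.1) -> str:
    """Return the hypothesis with the highest base score plus context bonus.

    hypotheses: (transcript, base score) pairs, e.g. an n-best list from a
    hypothetical STT engine. context_terms must be lowercase. Each distinct
    context term found in a transcript adds `boost` to its score.
    """
    def total(item: Tuple[str, float]) -> float:
        text, base = item
        words = {w.strip(".,?!").lower() for w in text.split()}
        return base + boost * len(words & context_terms)

    return max(hypotheses, key=total)[0]


# Earlier in the conversation, the user mentioned booking a flight to Boston.
context = {"flight", "boston"}
n_best = [
    ("I want to book a fright to Boston", 0.62),
    ("I want to book a flight to Boston", 0.60),
]
best = rescore(n_best, context)
```

Without context, the slightly higher-scoring "fright" candidate wins. With the earlier mention of "flight" in context, the rescored choice becomes "I want to book a flight to Boston".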

By integrating STT technology, developers can build more effective, efficient, and accessible conversational AI tools that offer a more natural, engaging user experience.

Advancements in STT technology and conversational AI

STT technology has come a long way since its inception. Modern STT systems leverage deep learning algorithms to achieve high accuracy rates, even in noisy environments. They can understand multiple languages, accents, and dialects, making them more versatile and user-friendly.
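STT accuracy is commonly reported as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the engine's transcript into a reference transcript, divided by the number of reference words. Lower is better. The function below is a minimal sketch using a word-level edit distance. It only lowercases and splits on whitespace; production scoring tools also normalize punctuation and numbers.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = minimum edits to turn the first i reference words
    # into the first j hypothesis words (word-level Levenshtein distance).
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i                      # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j                      # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            same = ref[i - 1] == hyp[j - 1]
            dist[i][j] = min(
                dist[i - 1][j] + 1,                          # deletion
                dist[i][j - 1] + 1,                          # insertion
                dist[i - 1][j - 1] + (0 if same else 1),     # match / substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)


print(word_error_rate("book a flight to boston", "book a fright to austin"))  # 0.4
```

Two of the five reference words are substituted, so the WER is 2 / 5 = 0.4. Running the same calculation over recordings from noisy environments is one way to check an engine's accuracy claims against your own audio.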

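One of the latest trends in STT technology is the use of transformer-based models. Transformers first proved their ability to model context in text through language models like BERT (Bidirectional Encoder Representations from Transformers). Speech models such as OpenAI's Whisper apply the same architecture to audio, using the surrounding words to produce more accurate transcriptions.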

Conversational AI has also seen significant advancements. Traditional rule-based chatbots have given way to AI-powered bots that can understand and respond to complex queries. They can maintain the context of a conversation, handle multiple topics simultaneously, and even respond in an empathetic tone.

The advent of tools like OpenAI’s GPT-4 and Whisper has taken conversational AI to new heights. Large language models like GPT-4 can generate human-like text and responses, while speech recognition models like Whisper transcribe spoken input across many languages. Together, they make interactions with chatbots more engaging and natural.

Leveraging STT and conversational AI for chatbots

As developers, integrating STT and conversational AI into your chatbots can significantly enhance their functionality. Here are some ways you can take your conversational AI tools to the next level:

1. Use pre-trained models

Training models from scratch requires a significant amount of time, computational resources, and a large, diverse dataset. Pre-trained models have already been trained on extensive datasets, saving developers from the time-consuming and resource-intensive training process.

Because these datasets are vast and diverse, the resulting models can handle a wide range of tasks and scenarios. They can understand multiple languages, accents, and dialects, which makes them more versatile and user-friendly.

Large deep learning models in particular can often handle complex tasks, such as understanding the context of a conversation, which is crucial for effective conversational AI.

2. Customize your models

The diversity of their training data makes pre-trained models extremely versatile, but that doesn’t mean they’ll always suit your specific needs. Suppose you need to design your chatbot for a specific use case or domain (like healthcare, finance, or customer support). In that case, customizing these models helps them better understand and respond to domain-specific language, jargon, or user intents.

Outside of domain-specific training, your user base might have unique characteristics, such as a specific accent, dialect, or language that’s not well-represented in the training data of the pre-trained model. By fine-tuning the model on your own data, you can improve its performance for your specific user base.

Finally, customization allows the model to better understand the context and nuances of the conversations it will handle. A better understanding of context leads to more accurate transcriptions in the case of STT and more appropriate responses in the case of conversational AI, thereby improving the overall performance of the chatbot.
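Fine-tuning changes the model itself. A lighter-weight complement, sometimes useful while you collect fine-tuning data, is to post-process transcripts with a domain glossary that maps known misrecognitions to the correct terms. The sketch below shows that post-processing technique, not fine-tuning. The medical glossary entries are hypothetical examples of the kind of errors you might find by reviewing your own transcripts.

```python
import re
from typing import Dict


def apply_glossary(transcript: str, glossary: Dict[str, str]) -> str:
    """Replace known misrecognitions with domain terms (case-insensitive, whole words)."""
    # Apply longer phrases first so a short entry can't break up a longer one.
    for phrase in sorted(glossary, key=len, reverse=True):
        pattern = r"\b" + re.escape(phrase) + r"\b"
        replacement = glossary[phrase]
        # A function replacement avoids re interpreting backslashes in the term.
        transcript = re.sub(pattern, lambda _m, rep=replacement: rep,
                            transcript, flags=re.IGNORECASE)
    return transcript


# Hypothetical healthcare glossary built from reviewing real transcripts.
MEDICAL_TERMS = {
    "a fib": "AFib",
    "met formin": "metformin",
    "h b a one c": "HbA1c",
}

print(apply_glossary("Patient has A fib and takes met formin", MEDICAL_TERMS))
# Patient has AFib and takes metformin
```

Because matches must fall on word boundaries, an entry like "a fib" does not alter words such as "fibula". Still, every glossary entry should be checked against real transcripts before use.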

3. Expect to handle multiple languages and accents

If you plan to serve a global audience, multilingual support lets your chatbots serve users from different regions and linguistic backgrounds, expanding their reach and usability.

Because many users prefer interacting with AI tools in their native languages, multilingual chatbots can provide a better user experience, leading to higher engagement and satisfaction. Nuances, slang, and colloquialisms also vary greatly between languages, so a chatbot that properly supports each language can understand and respond to these variations more accurately.

Supporting multiple languages also makes chatbots more accessible and inclusive. It can be a key differentiator that sets a chatbot apart from competitors that support only one or a few languages.
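In practice, a multilingual bot has to decide which language pipeline handles each utterance. Many STT engines can report a detected language. The sketch below assumes a hypothetical transcript object with a language code and a detection confidence. It routes to that language only if the bot supports it and the detection is confident enough, and otherwise falls back to a default. The `threshold` of 0.6 is an arbitrary illustrative value.

```python
from dataclasses import dataclass


@dataclass
class Transcript:
    text: str
    language: str      # language code reported by a hypothetical STT engine
    confidence: float  # confidence of the language detection, 0.0 to 1.0


# Languages this (hypothetical) bot has NLU models and replies for.
CLARIFY_PROMPTS = {
    "en": "Sorry, I didn't catch that. Could you repeat it?",
    "es": "Lo siento, no lo entendí. ¿Puede repetirlo?",
    "fr": "Désolé, je n'ai pas compris. Pouvez-vous répéter ?",
}


def pick_language(t: Transcript, default: str = "en", threshold: float = 0.6) -> str:
    """Use the detected language only if we support it and detection is confident."""
    if t.language in CLARIFY_PROMPTS and t.confidence >= threshold:
        return t.language
    return default


lang = pick_language(Transcript("¿Dónde está mi pedido?", "es", 0.93))
print(lang, "->", CLARIFY_PROMPTS[lang])
```

Falling back to a default language on low confidence is only one policy. Another is to ask the user which language they prefer.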

4. Maintain context

Context plays a vital role in understanding the meaning of words and phrases. The same word can have different meanings in different contexts. By maintaining context, chatbots can accurately understand user inputs and provide appropriate responses.

Furthermore, in human conversations, we often refer back to previous statements or rely on the overall context of the conversation. For a chatbot to mimic this natural flow, it needs to maintain and understand the context of the conversation.

A chatbot that maintains context can handle complex conversations, switch between topics seamlessly, and remember past interactions. This ability leads to a more engaging and satisfying user experience.
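A common way to maintain context is to keep a short history of turns plus a set of remembered "slots" (such as a city or date). A follow-up like "What about tomorrow?" can then be completed from earlier turns. The class below is a minimal sketch of that approach. The slot values would normally come from an NLU step and are hard-coded here, and `max_turns` is an illustrative limit.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple


class ConversationContext:
    """Remembers recent turns and slot values (e.g. city, date) across turns."""

    def __init__(self, max_turns: int = 10):
        # Oldest turns drop off automatically once max_turns is reached.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)
        self.slots: Dict[str, str] = {}

    def add_turn(self, speaker: str, text: str,
                 slots: Optional[Dict[str, str]] = None) -> None:
        self.turns.append((speaker, text))
        if slots:
            self.slots.update(slots)  # newer values overwrite older ones

    def resolve(self, slots: Dict[str, str]) -> Dict[str, str]:
        """Fill in slots the current turn omitted from earlier turns."""
        merged = dict(self.slots)
        merged.update(slots)
        return merged


ctx = ConversationContext()
# Slot values would come from an NLU step; they are hard-coded here.
ctx.add_turn("user", "What's the weather in Paris today?",
             {"city": "Paris", "date": "today"})
# The follow-up never names the city, so it comes from context.
request = ctx.resolve({"date": "tomorrow"})
print(request)  # {'city': 'Paris', 'date': 'tomorrow'}
```

The bounded history keeps memory use predictable in long calls. The trade-off is that very old references eventually fall out of context.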

5. Test and iterate

Testing allows you to evaluate the performance of your chatbot in real-world scenarios. It helps identify areas where the chatbot excels and falls short, providing valuable insights for improvement.

Through testing, you can identify and fix bugs, errors, or unexpected behavior in the chatbot, ensuring it functions as intended and provides a smooth user experience. Testing is also an opportunity to gather user feedback, which offers a user's perspective on the chatbot's performance, usability, and functionality. This feedback loop is essential for maintaining performance, because it ensures the system adapts to real usage patterns rather than just scripted scenarios.

During testing, developers can assess response latency, the accuracy of intent recognition, and how gracefully the chatbot recovers from misunderstood queries. Testing different conversation branches also shows whether the chatbot can sustain context over longer interactions. By refining these aspects iteratively, teams build a chatbot that improves with every cycle instead of stagnating after launch.

And the testing doesn’t stop once you’ve released your chatbot into the wild. Language and the way people use it are constantly evolving. Regular testing and iteration allow the chatbot to adapt to these changes and stay relevant.
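Intent accuracy and response latency can be tracked with a small regression harness that runs labeled test utterances through the chatbot. The sketch below assumes a hypothetical `keyword_classifier` in place of a real intent model, and the test cases are invented. The goal is to show how failures and latency surface on each run.

```python
import time
from statistics import median
from typing import Callable, Dict, List, Tuple


def evaluate(classify: Callable[[str], str],
             cases: List[Tuple[str, str]]) -> Dict[str, object]:
    """Run (utterance, expected_intent) cases; report accuracy, latency, failures."""
    correct = 0
    latencies_ms: List[float] = []
    failures: List[Tuple[str, str, str]] = []
    for utterance, expected in cases:
        start = time.perf_counter()
        predicted = classify(utterance)
        latencies_ms.append((time.perf_counter() - start) * 1000)
        if predicted == expected:
            correct += 1
        else:
            failures.append((utterance, expected, predicted))
    return {
        "accuracy": correct / len(cases),
        "median_latency_ms": median(latencies_ms),
        "failures": failures,
    }


def keyword_classifier(text: str) -> str:
    """Hypothetical stand-in for the chatbot's real intent model."""
    lowered = text.lower()
    if "refund" in lowered:
        return "refund"
    if "cancel" in lowered:
        return "cancel_order"
    return "fallback"


report = evaluate(keyword_classifier, [
    ("I want a refund", "refund"),
    ("Please cancel my order", "cancel_order"),
    ("Can I get my money back?", "refund"),   # paraphrase the keywords miss
    ("Hello there", "fallback"),
])
print(report["accuracy"], report["failures"])
```

Here the paraphrased refund request is misclassified, so accuracy is 0.75 and the failure list shows exactly which utterance to add to the next round of training or rules. Adding real user phrasings to `cases` after launch keeps the harness aligned with how people actually speak.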

Following these best practices takes some effort from your development team. The good news is that you can cover many of them by partnering with a platform that builds them into its STT engine.

Choose a next-gen platform to build next-level conversational AI

STT and conversational AI are transforming the way we interact with machines. As developers, staying abreast of the latest trends and advancements in these fields can help you build more effective and engaging chatbots. By leveraging these technologies, you can create chatbots that understand and respond to voice commands and provide a more natural and intuitive user experience.

But end-users aren’t the only ones who should have access to an intuitive UX. The platforms we use to create conversational AI tools should also be easy to use, offer natural workflows, and leverage the latest advancements in conversational AI.

Telnyx’s Voice API offers an intuitive, advanced platform for developers interested in building and managing next-level voice-activated chatbots. Our STT engine leverages next-gen technology like OpenAI Whisper, which has been trained on 680,000 hours of multilingual and multitask supervised data and can support nearly 60 languages. Paired with high-quality voice calls running on our global private network, the Telnyx platform ensures your conversational AI tools receive high-quality inputs for equally high-quality interactions. Want to see Telnyx STT in action? Watch our demo to see how to start transcribing calls in real time in just a few minutes.

Contact our team of experts to learn how you can leverage Telnyx’s Voice API to build next-level conversational AI solutions.
