
How to build a Voice AI product that does not fall apart on real calls

Explore why most voice AI fails in production, what a real system must do end-to-end, and how to measure success using job-level metrics and reliable escalation.

Voice AI is no longer blocked on model quality.

Speech recognition is good enough to deploy. Text-to-speech is natural enough for real conversations. Large language models can reason, follow instructions, and call tools.

Yet most voice AI systems fail in production in very similar ways:

- They feel slow in live conversation

- They break when callers interrupt or change direction

- They lose context across turns or handoffs

- They cannot safely take real actions in core systems

These are not model failures. They are product failures.

The moment you put real traffic on a phone line, everything gets stress tested at once. Latency stops being a number on a dashboard and starts being a pause the caller notices.

State stops being an internal variable and starts being whether the agent remembers what just happened. From that point on, you are no longer shipping an “AI agent.” You are responsible for the outcome of a phone call.

When teams internalize that, the gap between a demo and a production-ready product becomes obvious.

What a voice AI product really is

Most teams start from a familiar place:

“We already have an LLM and a telephony provider. Let’s wire them together.”

That usually becomes a simple pipeline:

call → streaming audio → STT → LLM → TTS → audio back to the caller

On paper, this looks like a voice AI product. In practice, it often means:

- 600–900 ms of round-trip delay once all hops are included

- Awkward turn-taking that makes callers talk over the agent

- Fragile behavior under interruptions

- Debugging that spans multiple vendors and networks

A serious voice AI product is not “an LLM on a phone number.” It is a system that does 4 things well, every time:

- Hear: capture and stream audio reliably in both directions

- Understand: transcribe and interpret intent in real time

- Decide: maintain context and choose the next action

- Act and speak: take actions in downstream systems and respond naturally

The key insight is simple: the unit of value for voice AI is the end-to-end call loop. Every design decision either protects that loop or degrades it.

The 3 ways voice AI creates real value

If a voice AI product does not clearly do at least one of these, users will not trust it and operators will not rely on it.

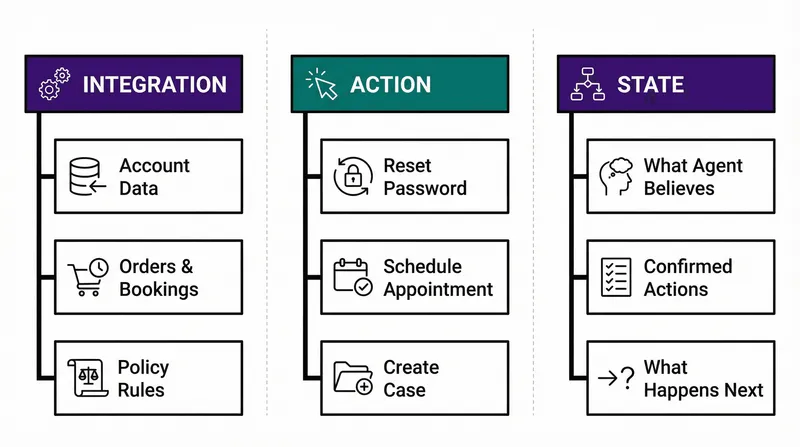

1. Know more than the base model

Great voice AI products are connected to the systems that matter.

That usually means access to:

- account and billing data

- orders, bookings, and shipments

- policy rules and entitlements

- internal knowledge bases and runbooks

- real-time signals like risk or fraud scores

Without this, you are mostly building a more pleasant IVR. The hard part is not calling an API. The hard part is doing it reliably, securely, and fast enough to keep the conversation flowing.

2. Do real work on the caller’s behalf

A voice agent that can only answer questions is not a product but a talking help article.

Real value shows up when the agent can take action:

- reset a password

- reschedule an appointment

- create or update a case

- change a plan or payment method

- route or escalate with context

This requires more than exposing tools. It requires clear schemas, strong guardrails, and operations that are safe to retry and audit. If the agent cannot safely change state in your systems, it will never move meaningful business metrics.
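As a minimal sketch of what "safe to retry and audit" can look like, the example below runs each state-changing action at most once per idempotency key and records every attempt. The `ActionRunner` class, the key format, and the `reschedule` function are all hypothetical names chosen for illustration; a real system would back the result store and the log with durable storage.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ActionRunner:
    """Runs state-changing operations at most once per idempotency key."""
    results: Dict[str, dict] = field(default_factory=dict)  # key -> stored result
    audit_log: List[dict] = field(default_factory=list)     # append-only record

    def run(self, key: str, name: str, fn: Callable[[], dict]) -> dict:
        if key in self.results:
            # A retry with the same key returns the stored result
            # instead of changing state a second time.
            self.audit_log.append({"key": key, "action": name, "replayed": True})
            return self.results[key]
        result = fn()
        self.results[key] = result
        self.audit_log.append({"key": key, "action": name, "replayed": False})
        return result


# Hypothetical downstream system: counts how often the appointment really moved.
downstream = {"writes": 0}


def reschedule() -> dict:
    downstream["writes"] += 1
    return {"appointment_id": "apt_1", "new_slot": "2025-01-10T09:00"}


runner = ActionRunner()
first = runner.run("call42:reschedule:apt_1", "reschedule_appointment", reschedule)
retry = runner.run("call42:reschedule:apt_1", "reschedule_appointment", reschedule)
```

Here the retry returns the same result as the first attempt, the downstream system is written to once, and the audit log shows both attempts with the second marked as a replay.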

3. Keep state in a way humans can understand

Even on the phone, structure matters.

A strong product makes it clear:

- what the agent believes is happening

- what it has already confirmed

- what actions were taken

- what happens next

If escalation is required, that state should survive the handoff. Otherwise, the intelligence stays buried in transcripts and logs, where it helps no one.
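One way to keep that state out of transcripts is to hold it in a small structured record that mirrors the four points above. The sketch below is illustrative only: `CallState`, its field names, and the JSON handoff format are assumptions, not a prescribed schema.

```python
from dataclasses import dataclass, field, asdict
from typing import Dict, List
import json


@dataclass
class CallState:
    job: str                                                  # what the agent believes is happening
    confirmed: Dict[str, str] = field(default_factory=dict)   # what it has already confirmed
    actions_taken: List[str] = field(default_factory=list)    # what actions were taken
    next_step: str = ""                                       # what happens next

    def handoff_payload(self) -> str:
        """Serialize the state so a human agent does not start from zero."""
        return json.dumps(asdict(self), sort_keys=True)


state = CallState(job="reschedule_appointment")
state.confirmed["customer_id"] = "cust_123"
state.actions_taken.append("looked_up_appointment")
state.next_step = "offer_new_slots"

# What the receiving human (or system) would read after the transfer.
payload = json.loads(state.handoff_payload())
```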

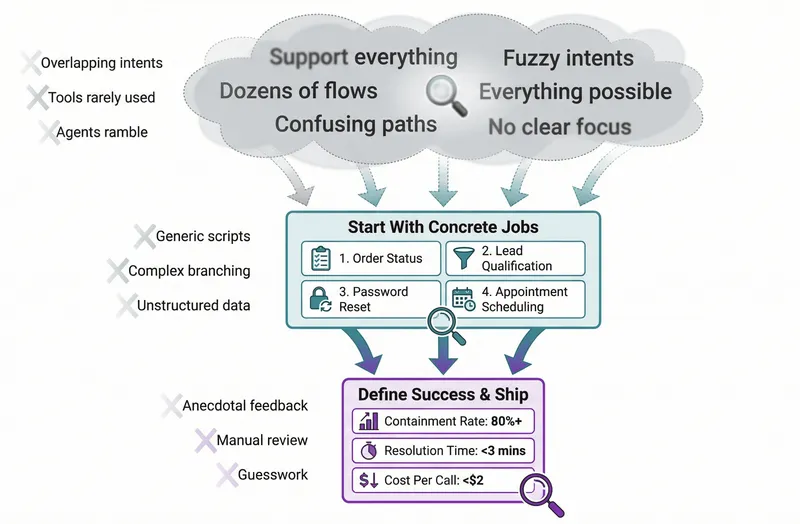

Do not port your whole product to voice

A common failure mode sounds like this:

“We have dozens of flows in our web app. The voice agent should support all of them.”

What usually follows:

- a large, fuzzy agent definition

- overlapping intents

- tools that technically exist but are rarely used correctly

- an agent that hesitates, rambles, or escalates too often

A better approach is to work backward from jobs-to-be-done.

Start with a small number of concrete jobs

Examples:

- deflect simple order status calls

- qualify inbound leads and book meetings

- handle password resets end to end

- schedule appointments under clear constraints

If you cannot measure success for the job, it should not ship.

Identify where the base model breaks

For each job, ask:

- does this require live or private data?

- does it change state in a production system?

- does it require strict policy or compliance handling?

- is latency a hard constraint?

Anywhere the answer is yes, you need explicit capabilities, not a better prompt.

Expose a small set of clear operations

Think in terms of concrete actions:

- get_order_status(order_id)

- update_shipping_address(order_id, address)

- create_case(customer_id, category, summary)

- transfer_to_human(queue, context_summary)

If you cannot describe your product using a short list like this, the surface area is probably too large.
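One way to keep that surface area honest is to declare the operations in a single registry and reject any call that falls outside it. The sketch below reuses the four operation names from this section; the registry shape and the `validate_call` helper are hypothetical, and a real deployment would also validate argument types and permissions.

```python
from typing import Dict, List, Tuple

# Operation names and parameters come from the short list in this section;
# the registry structure itself is an illustrative assumption.
OPERATIONS: Dict[str, Tuple[str, ...]] = {
    "get_order_status": ("order_id",),
    "update_shipping_address": ("order_id", "address"),
    "create_case": ("customer_id", "category", "summary"),
    "transfer_to_human": ("queue", "context_summary"),
}


def validate_call(name: str, args: dict) -> List[str]:
    """Return a list of problems; an empty list means the call may proceed."""
    if name not in OPERATIONS:
        return [f"unknown operation: {name}"]
    required = OPERATIONS[name]
    problems = [f"missing argument: {p}" for p in required if p not in args]
    problems += [f"unexpected argument: {a}" for a in args if a not in required]
    return problems
```

A model-proposed call such as `validate_call("update_shipping_address", {"order_id": "A1"})` comes back with a missing-argument problem, so the agent can ask the caller for the address instead of sending a half-formed request downstream.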

Design for real calls, not lab conversations

Callers interrupt. They change their minds. They talk over the agent. They call from noisy environments. Networks drop packets.

A production voice AI product treats these as default conditions.

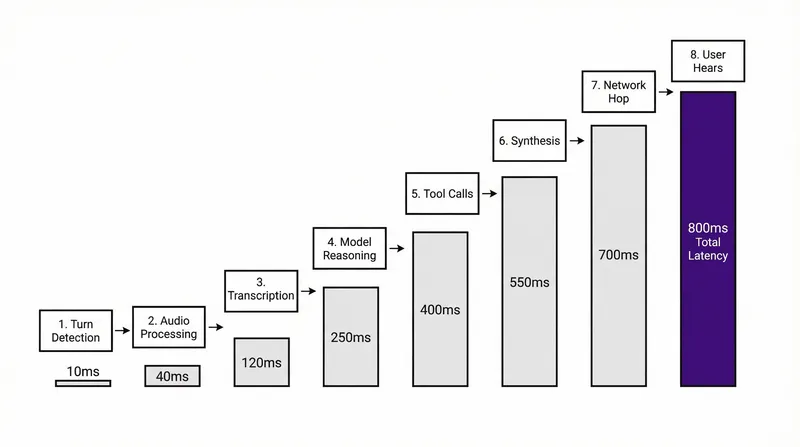

Latency is the environment

People notice delays of a few hundred milliseconds in live conversation. Once pauses approach a second, the interaction starts to feel broken, regardless of how good the content is.

What makes this tricky is that each component often looks fine in isolation. The delay accumulates across the system.

That is why latency has to be treated as a first-class product constraint, not a backend optimization.

Where most latency metrics lie

Many teams underestimate latency because they measure it from the wrong place.

Most platforms report how long things take inside their own infrastructure. Those numbers exclude the final hop back to the user.

Callers experience something different. They experience the pause between when they stop speaking and when they hear the agent begin responding.

That gap includes:

- turn detection

- audio processing

- transcription

- model reasoning

- tool calls

- synthesis

- orchestration overhead

- last-mile network transmission

This is also why recordings almost always look faster than live calls. Recordings are captured on the platform, not at the edge where the user actually is.
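To make the accumulation concrete, here is a rough per-turn budget built from the components listed above. Every number is an illustrative assumption, not a measurement, and the `platform_reported_ms` helper simply models a platform-side figure that leaves out the last-mile hop.

```python
# Illustrative per-turn budget in milliseconds. Every value is an assumption.
BUDGET_MS = {
    "turn_detection": 200,
    "audio_processing": 20,
    "transcription": 100,
    "model_reasoning": 250,
    "tool_calls": 150,
    "synthesis": 100,
    "orchestration_overhead": 30,
    "last_mile_network": 60,
}


def perceived_latency_ms(budget: dict) -> int:
    """What the caller experiences: the sum of every stage."""
    return sum(budget.values())


def platform_reported_ms(budget: dict) -> int:
    """A platform-side number that excludes the final hop to the user."""
    return sum(ms for stage, ms in budget.items() if stage != "last_mile_network")


total = perceived_latency_ms(BUDGET_MS)       # 910
reported = platform_reported_ms(BUDGET_MS)    # 850
```

No single stage in this made-up budget exceeds 250 ms, yet the caller waits close to a second, and the platform-side figure understates it.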

To avoid this blind spot, we now surface end-user perceived latency per turn directly in publicly shareable AI widget demos. The measurement is taken on the client. It starts when the user finishes speaking and ends when they hear the agent respond.
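A client-side measurement of this kind can be sketched in a few lines. The `TurnLatencyTimer` class and its method names below are hypothetical and do not describe the widget's actual implementation; they only show the idea of starting the clock when the user stops speaking and stopping it when agent audio is first heard.

```python
import time
from typing import Callable, List, Optional


class TurnLatencyTimer:
    """Records perceived latency per turn, measured where the user is."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock                      # injectable for testing
        self._speech_ended_at: Optional[float] = None
        self.samples_ms: List[float] = []

    def user_stopped_speaking(self) -> None:
        self._speech_ended_at = self._clock()

    def agent_audio_started(self) -> None:
        if self._speech_ended_at is None:
            return  # no open turn to close
        elapsed = self._clock() - self._speech_ended_at
        self.samples_ms.append(elapsed * 1000.0)
        self._speech_ended_at = None


# Usage with a fake clock: the user stops at t=10.0 s, audio starts at t=10.75 s.
ticks = iter([10.0, 10.75])
timer = TurnLatencyTimer(clock=lambda: next(ticks))
timer.user_stopped_speaking()
timer.agent_audio_started()
```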

Once teams see this number, architectural trade-offs stop being theoretical. Slow turn-taking becomes impossible to ignore.

Barge-in should be the default

If callers cannot interrupt naturally, they will talk over the agent, repeat themselves, and escalate early.

Supporting barge-in requires low and predictable latency, streaming transcription while speech is playing, and session logic that can safely cancel output. This is not something you bolt on later.
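The "safely cancel output" part can be sketched with Python's `asyncio`. This is a toy model under stated assumptions: `speak` stands in for TTS playback, a timer stands in for detecting caller speech, and the 50 ms chunk duration is arbitrary. A real system would cancel on a voice-activity or streaming-transcription event and flush audio already buffered downstream.

```python
import asyncio


async def speak(chunks: list, played: list) -> None:
    """Stand-in for TTS playback: 'plays' one chunk at a time."""
    for chunk in chunks:
        played.append(chunk)
        await asyncio.sleep(0.05)  # pretend each chunk takes 50 ms to play


async def run_turn(chunks: list, caller_speaks_after: float) -> dict:
    played: list = []
    playback = asyncio.create_task(speak(chunks, played))
    # Stand-in for "caller started talking": fires after a fixed delay.
    barge_in = asyncio.create_task(asyncio.sleep(caller_speaks_after))
    done, _ = await asyncio.wait(
        {playback, barge_in}, return_when=asyncio.FIRST_COMPLETED
    )
    interrupted = playback not in done
    if interrupted:
        playback.cancel()              # stop speaking immediately
        try:
            await playback             # let the cancellation settle
        except asyncio.CancelledError:
            pass
    else:
        barge_in.cancel()              # agent finished; stop waiting for barge-in
    return {"interrupted": interrupted, "played": played}


result = asyncio.run(run_turn(["Your", "order", "ships", "on", "Friday"], 0.12))
```

The `played` list matters as much as the cancellation: the session needs to know which part of the response the caller actually heard before deciding what to say next.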

Escalation is part of the product

Every real deployment needs a clean handoff path.

Good escalation means:

- recognizing when the agent is stuck

- transferring reliably

- passing a compact summary and structured state

- avoiding restarts and repetition

Bad escalation is treating failure as an edge case instead of a design requirement.
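Recognizing that the agent is stuck can start as a simple, explicit rule. The sketch below is one hypothetical policy: escalate when the caller asks for a human, or after a set number of consecutive turns the agent did not understand. The `TurnOutcome` fields and the threshold of two are assumptions to tune per job.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TurnOutcome:
    understood: bool                      # did the agent resolve the caller's intent?
    caller_asked_for_human: bool = False


def should_escalate(history: List[TurnOutcome], max_consecutive_misses: int = 2) -> bool:
    """Escalate on an explicit request or on repeated misses at the end of the call."""
    if history and history[-1].caller_asked_for_human:
        return True
    misses = 0
    for turn in reversed(history):        # count misses back from the latest turn
        if turn.understood:
            break
        misses += 1
    return misses >= max_consecutive_misses
```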

Infrastructure choices quietly define the product

You can prototype voice AI on almost any stack. Production systems expose the trade-offs quickly.

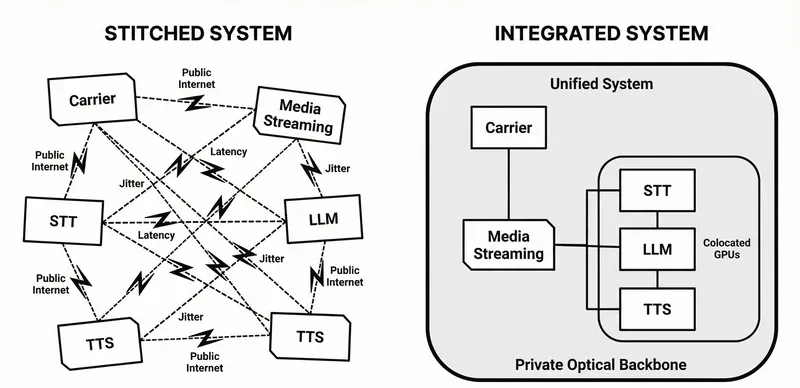

There are two common approaches:

- Stitched: carrier, media streaming, STT, LLM, and TTS from different vendors

- Integrated (Telnyx): voice, media, orchestration, and AI tightly coupled, with optional model choice

Stitched systems maximize flexibility but introduce more hops, more jitter, and more failure modes. Every time audio leaves one vendor to go to another, it traverses the public internet, adding unpredictable latency.

Integrated systems collapse the loop. Telnyx has built this by running the inference engine directly on the carrier network.

Zero-Hop Inference: By keeping the media on a private optical backbone and colocating the GPUs directly with the carrier switch, you shave off the critical milliseconds that generic cloud providers lose to the public web.

Unified State: The system that streams the audio is the same system that runs the model.

If you promise natural conversation, your architecture has to support it.

How to know if your voice AI product is good

Subjective feedback is useful, but it is never sufficient on its own. Voice AI systems feel fine until they are under load, handling real callers with real stakes. You need metrics that reflect how the system behaves in production, not how it sounds in a demo.

Track two kinds of metrics:

System metrics to track by job

- end-to-end round-trip latency per turn, measured at the client

- barge-in success rate

- transcription error rate on live calls

- escalation rate and where it happens

- abandonment during agent turns

Look at these by job and by intent, not as global averages. A system that performs well on simple calls but collapses on slightly more complex ones will hide that failure if you only look at blended numbers.
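A small, made-up dataset shows how a blended number hides the problem. The job names and call counts below are invented for illustration; the point is only the gap between the global rate and the per-job rates.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Invented sample: (job, escalated?) for 110 calls.
call_log: List[Tuple[str, bool]] = (
    [("order_status", False)] * 90
    + [("order_status", True)] * 10
    + [("change_plan", False)] * 4
    + [("change_plan", True)] * 6
)


def escalation_rate_by_job(log: List[Tuple[str, bool]]) -> Dict[str, float]:
    totals: Dict[str, int] = defaultdict(int)
    escalated: Dict[str, int] = defaultdict(int)
    for job, was_escalated in log:
        totals[job] += 1
        if was_escalated:
            escalated[job] += 1
    return {job: escalated[job] / totals[job] for job in totals}


blended = sum(1 for _, esc in call_log if esc) / len(call_log)
by_job = escalation_rate_by_job(call_log)
```

The blended escalation rate here is about 15%, which looks acceptable, while the `change_plan` job escalates 60% of the time.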

Outcome metrics that matter

- containment by intent

- time to resolution versus human-only baselines

- cost per resolved call or per booking

- post-call CSAT or NPS where available

A strong voice AI product does not try to win every call. It does the jobs it claims it can do, does them consistently, and exits quickly when it cannot.

A quick checklist

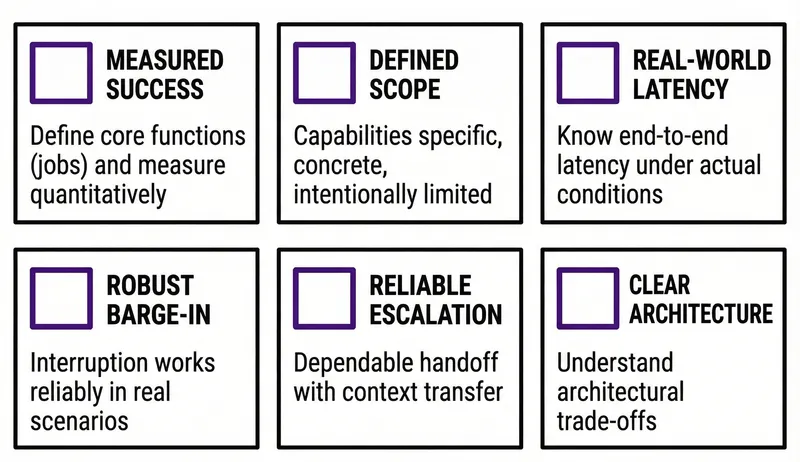

A voice AI product can be considered "ready" when you can confidently affirm the following criteria:

- Measured Success: We have defined a limited set of core functions (jobs) and can quantitatively measure success for each.

- Defined Scope: The product's capabilities are specific, concrete, and intentionally limited.

- Real-World Latency: We accurately know the end-to-end latency under actual operating conditions.

- Robust Barge-in: The ability for a user to interrupt the AI works reliably in real-world scenarios.

- Reliable Escalation: The process for escalating a conversation to a human is dependable and correctly transfers context.

- Clear Architecture: We fully understand the implications and trade-offs of the architectural choices we made.

If you get these right, you are finally shipping a voice AI product that holds up in real-world production.
