How to evaluate Voice AI the way real conversations work

Learn the five metrics, including TTFA, E2E latency, and barge-in latency, that explain why your Voice AI feels slow on real customer calls.

“It feels slow.”

It’s the deadliest feedback you can get because it’s directionless.

It’s ambiguous, impossible to debug, and a leading indicator that users won’t trust your Voice AI agent to do real work.

You don’t know why it felt slow or where the turn broke. And if you can’t measure it, you can’t fix it.

Voice isn’t like chat. It’s ASR → LLM → TTS running over networks that hate real-time.

A single slow handoff destroys the entire experience.

At Telnyx, we see the Voice AI companies that succeed all converge on this evaluation framework.

It is simple, practical, and grounded in how real conversations work.

1. Measure the silence users actually feel

The moment a user stops talking, a timer starts in their mind.

Humans expect a response in ~200–300ms. If your agent doesn’t speak for 800–1000ms, the user assumes the bot missed them and repeats themselves.

This repetition shows up in your transcripts as confusion, increases call duration, and tanks containment rates.

In a perfect world, the metric you would measure is:

the moment the user believes they stopped speaking to the moment they hear the agent reply.

This is the true user-perceived latency.

Most teams cannot measure that directly because they cannot instrument the caller’s device. They rely on server-side timestamps, which do not include the first-mile and last-mile transport time the user actually feels.

Once you recognize that server-side timing is a proxy, you can approximate the real experience with two practical timestamps:

- when the system detects end-of-speech and

- when the first audio frame leaves your TTS engine.

The gap between them is Time to First Audio (TTFA). This is the closest server-side proxy for what the user experiences. When TTFA stays under 600ms, conversations feel stable. When it drifts above 800 to 900ms, interactions start to break down.

But TTFA is only half the story.

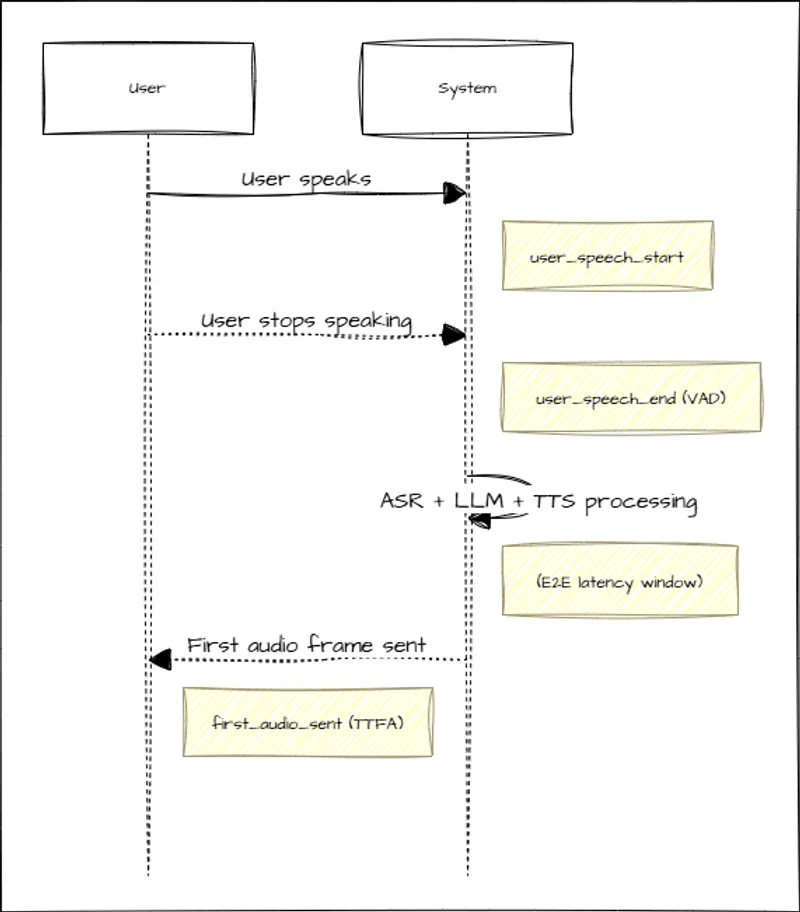

Some teams also measure the full turn duration from user_speech_start to first_audio_sent. This can help you understand how long each turn feels overall, but it is dominated by how long the user speaks.

For debugging performance issues, TTFA and component-level latency are far more useful.

Log these timestamps:

- user_speech_start (from VAD)

- user_speech_end (from VAD)

- first_audio_sent (from TTS)

TTFA tells you what the user perceives.

Turn duration tells you whether a conversation feels heavy.
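As a minimal sketch, both numbers fall out of the three timestamps above with two subtractions. The `TurnTimestamps` class and its field layout are illustrative assumptions rather than any specific SDK, and the timestamps are assumed to be server-side milliseconds taken from one shared clock.

```python
from dataclasses import dataclass


@dataclass
class TurnTimestamps:
    """Server-side timestamps for one turn, in milliseconds (hypothetical layout)."""
    user_speech_start: float  # VAD detects the start of user speech
    user_speech_end: float    # VAD detects the end of user speech
    first_audio_sent: float   # first TTS audio frame leaves the server


def ttfa_ms(turn: TurnTimestamps) -> float:
    """Time to First Audio: end-of-speech to first audio frame sent."""
    return turn.first_audio_sent - turn.user_speech_end


def turn_duration_ms(turn: TurnTimestamps) -> float:
    """Full turn duration: start of user speech to first audio frame sent."""
    return turn.first_audio_sent - turn.user_speech_start


# Example turn: the user speaks for 2.4 s and the agent answers 650 ms later.
turn = TurnTimestamps(user_speech_start=0.0, user_speech_end=2400.0, first_audio_sent=3050.0)
print(ttfa_ms(turn))           # 650.0
print(turn_duration_ms(turn))  # 3050.0
```

In this example the turn duration is mostly the 2.4 seconds the user spent speaking, which is why TTFA is the more useful number for debugging.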

Founders who track both avoid the classic pitfall: “It feels slow” even though individual components look fine.

2. Find which part of your pipeline is actually slow

When TTFA is too high, one of three components is usually responsible:

- ASR is slow. This is often caused by overly cautious endpointing. You’re waiting too long to confirm silence. This is the silent killer.

- The LLM takes too long to produce the first token. Strictly, the relevant metric is not time to first token. It is time to the first usable chunk, usually a short phrase that you can safely send to TTS. If the model takes 800 to 1200 milliseconds to produce that chunk, the call feels unresponsive even if the total generation time is fast.

- TTS has a long warm-up. This is where many early teams get blindsided. If it takes 300 to 400 milliseconds before any audio returns, your latency budget is already blown. Prompt engineering cannot fix this.

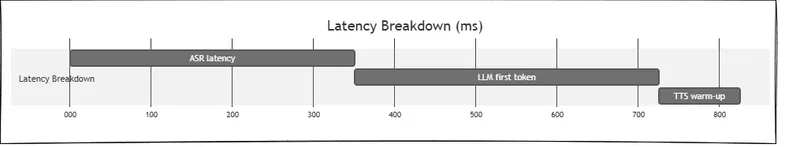

To identify the bottleneck, log:

- user_speech_end

- transcript_received

- first_token_generated

- first_audio_sent

Compute transcript_received - user_speech_end to get ASR latency.

Compute first_token_generated - transcript_received to get LLM TTFT.

Compute first_audio_sent - first_token_generated to get TTS latency.
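The three subtractions above can be sketched as one small function. The dictionary keys mirror the timestamp names in this section; the function name, the output key names, and the millisecond units are assumptions made for illustration.

```python
def component_latencies(ts: dict) -> dict:
    """Split one turn's TTFA into ASR, LLM, and TTS segments (all in ms)."""
    return {
        "asr_ms": ts["transcript_received"] - ts["user_speech_end"],
        "llm_ttft_ms": ts["first_token_generated"] - ts["transcript_received"],
        "tts_ms": ts["first_audio_sent"] - ts["first_token_generated"],
    }


# Example timestamps for a single turn (made-up values, in milliseconds).
timestamps = {
    "user_speech_end": 1000.0,
    "transcript_received": 1350.0,
    "first_token_generated": 1750.0,
    "first_audio_sent": 1850.0,
}

breakdown = component_latencies(timestamps)
print(breakdown)                # {'asr_ms': 350.0, 'llm_ttft_ms': 400.0, 'tts_ms': 100.0}
print(sum(breakdown.values()))  # 850.0
```

Because the segments are consecutive, they add up to TTFA (first_audio_sent - user_speech_end), so any turn with a high TTFA can be attributed to a specific stage.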

Once you're logging these transitions, the bottleneck becomes obvious.

In production, we've seen:

- ASR contributing 600ms when it should be 350ms (bad endpointing)

- LLM TTFT hitting 1100ms when it should be 400ms (cold start or overloaded inference)

- TTS adding 400ms when it should be 100ms (synchronous synthesis instead of streaming)

Each component has a target. When one component is 2x over target and the others are fine, you know where to focus.
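A rough sketch of that check: compare each observed component latency to its target and flag anything at or above 2x. The target values are the "should be" figures quoted above; the function name, the key names, and the `factor` parameter are hypothetical.

```python
# Per-component targets in milliseconds, taken from the figures quoted above.
TARGETS_MS = {"asr_ms": 350.0, "llm_ttft_ms": 400.0, "tts_ms": 100.0}


def over_target(observed: dict, targets: dict = TARGETS_MS, factor: float = 2.0) -> list:
    """Return (component, ratio) pairs at or above `factor` x target, worst first."""
    ratios = {name: observed[name] / targets[name] for name in targets}
    flagged = [(name, round(ratio, 2)) for name, ratio in ratios.items() if ratio >= factor]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Example: ASR and TTS are near target, the LLM is far over it.
observed = {"asr_ms": 360.0, "llm_ttft_ms": 1100.0, "tts_ms": 110.0}
print(over_target(observed))  # [('llm_ttft_ms', 2.75)]
```

The 2x threshold is a judgment call; a tighter factor surfaces drift earlier at the cost of more noise.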

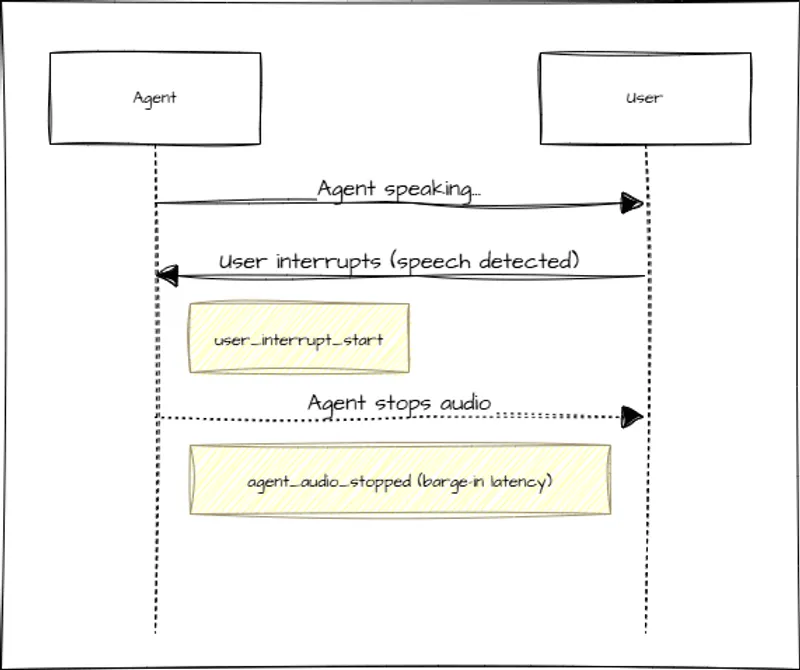

3. Check how your agent behaves when real users interrupt it

Speed isn’t enough. Users talk over the agent, pause mid-thought, and restart sentences.

If your system can’t handle those behaviors, it dies in production.

Two dynamics matter most:

- Interruption handling: When a user cuts in, the agent must stop talking fast, in under 500ms. Anything slower turns into awkward overlapping speech, users think the bot is “not listening,” and it compounds your TTFA problem. Measure it by logging user_interrupt_start (new speech detected during agent playback) and agent_audio_stopped (TTS halted). The gap between them is your barge-in latency.

- False endpoints: If your VAD cuts users off mid-sentence, they get annoyed fast. If it waits too long, you add awkward gaps. Track how often you misjudge user pauses. To measure this, compare detected endpoints to annotated ground truth on a sample of 100 to 200 calls. Mark where humans actually stopped speaking versus where your system decided they stopped. You want false endpoints under ~5%. Over 10%? You’ll see task completion fall off a cliff even if accuracy is fine. Getting this right matters as much as raw latency.
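Both measurements can be sketched in a few lines. The function names are hypothetical, timestamps are assumed to be in milliseconds, and the 200 ms `tolerance_ms` used to decide that an endpoint fired too early is an assumption you would tune against your own annotations.

```python
def barge_in_latency_ms(user_interrupt_start: float, agent_audio_stopped: float) -> float:
    """Time from the user's interruption to the agent going silent."""
    return agent_audio_stopped - user_interrupt_start


def false_endpoint_rate(detected_end_ms: list, annotated_end_ms: list,
                        tolerance_ms: float = 200.0) -> float:
    """Fraction of turns where the detected endpoint fired more than
    `tolerance_ms` before the human-annotated end of speech."""
    if len(detected_end_ms) != len(annotated_end_ms):
        raise ValueError("need one annotated endpoint per detected endpoint")
    if not detected_end_ms:
        return 0.0
    early = sum(
        1 for detected, annotated in zip(detected_end_ms, annotated_end_ms)
        if annotated - detected > tolerance_ms
    )
    return early / len(detected_end_ms)


# The agent stops 320 ms after the user starts talking over it.
print(barge_in_latency_ms(10_000.0, 10_320.0))  # 320.0

# Four annotated turns: only the second was cut off early (by 900 ms).
detected = [1000.0, 2500.0, 4000.0, 5200.0]
annotated = [1050.0, 3400.0, 4100.0, 5250.0]
print(false_endpoint_rate(detected, annotated))  # 0.25
```

This sketch only counts endpoints that fire too early. Endpoints that fire too late show up as extra ASR latency in the component breakdown instead.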

The 5 metrics you actually need to measure

If you’re early in your Voice AI journey, you don’t need a sprawling observability system.

You only need 5 numbers:

- user_speech_end: when VAD detects silence

- first_token_generated: when your LLM stream starts

- first_audio_sent: when TTS begins playback

- barge_in_latency: time from user interrupt to agent silence

- false_endpoint_rate: percentage of turns where you cut the user off

Everything else is optional until you scale.

These five measurements will tell you:

- What users experience (TTFA)

- Where your latency comes from (ASR vs LLM vs TTS)

- Whether users talk naturally or adjust to the bot

- Which sessions will churn or escalate (turns with >1000ms TTFA)

- How your changes actually impact retention and cost
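One way to act on the >1000ms signal is to flag sessions by their share of slow turns. This is a sketch under assumptions: the session IDs and TTFA values are made up, and the 25% `min_share` cutoff is a hypothetical starting point, not a recommended threshold.

```python
SLOW_TTFA_MS = 1000.0  # turns slower than this count as "slow"


def slow_turn_share(ttfa_values_ms: list, threshold_ms: float = SLOW_TTFA_MS) -> float:
    """Fraction of turns in one session whose TTFA exceeds the threshold."""
    if not ttfa_values_ms:
        return 0.0
    slow = sum(1 for value in ttfa_values_ms if value > threshold_ms)
    return slow / len(ttfa_values_ms)


def at_risk_sessions(sessions: dict, threshold_ms: float = SLOW_TTFA_MS,
                     min_share: float = 0.25) -> list:
    """Session IDs where at least `min_share` of turns were slow."""
    return sorted(
        session_id for session_id, values in sessions.items()
        if slow_turn_share(values, threshold_ms) >= min_share
    )


# Per-session TTFA values in milliseconds (made-up data).
sessions = {
    "call-a": [520.0, 610.0, 580.0, 640.0],
    "call-b": [700.0, 1250.0, 1400.0, 900.0],
    "call-c": [480.0, 1100.0, 530.0, 560.0],
}
print(at_risk_sessions(sessions))  # ['call-b', 'call-c']
```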

Once you are logging these 5 signals, you move from guessing to engineering. “It feels slow” becomes a specific spike, such as ASR waiting too long to endpoint, an LLM taking 900 milliseconds to produce its first token, or a TTS engine warming up for 300 milliseconds before sending audio.

When you understand the shape of that loop, you can tune it into something that feels human on the phone.

Why the edge matters as much as the pipeline

Everything above focuses on ASR, LLM, and TTS, but the caller also experiences two segments that most vendors never measure: first-mile network latency and last-mile network latency. These are the transport delays between the user’s device and the vendor’s media servers, and then back to the user once the agent speaks. They shape real conversational feel more than any internal model metric.

Most voice AI dashboards measure timing only after the audio reaches the vendor’s media servers. They also measure TTS timing only until the audio leaves the server. Neither measurement includes the round-trip travel time to the user.

If your user is in Europe and the vendor’s media and GPU servers sit in a U.S. region, the recorded call will look fast even while the real caller experiences an extra 100 to 200 milliseconds on every turn. This is why Voice AI platform evals often look cleaner than what a human hears in real usage.

The recording is captured in the data center, not at the user’s ear.

To get tight turn-taking, the audio needs to reach your ASR quickly, flow through inference quickly, and return to the user quickly. Server-side metrics alone do not capture that round trip.
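To make the gap concrete, here is a back-of-the-envelope sketch that adds one-way transport delays to a server-side TTFA. The function name and the example delay values are assumptions; in practice you would estimate first-mile and last-mile delay from your own network measurements.

```python
def estimated_perceived_ttfa_ms(server_ttfa_ms: float, first_mile_ms: float,
                                last_mile_ms: float) -> float:
    """Estimate what the caller hears: the user's last audio must reach the
    server (first mile) before end-of-speech is detected, and the agent's
    first audio frame must travel back to the caller (last mile)."""
    return first_mile_ms + server_ttfa_ms + last_mile_ms


# A dashboard TTFA of 600 ms with 75 ms of transport delay in each direction.
print(estimated_perceived_ttfa_ms(600.0, 75.0, 75.0))  # 750.0
```

A turn that looks stable at 600 ms on the server lands at 750 ms for the caller in this example, within reach of the range where conversations start to break down.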

Telnyx solves this by placing GPU inference right next to our global telephony points of presence.

That removes most of the first-mile and last-mile transport latency because audio no longer crosses the public internet for each hop. This cuts 60 to 100 milliseconds per hop and keeps the full conversational loop consistent instead of unpredictable.

If your agent depends on tight turn-taking where every 100 milliseconds matters, the most meaningful improvement is often not a new model.

It is running compute close to the audio.

Want Voice AI feedback from builders? Join our subreddit.
