
AI model tradeoffs: Intelligence vs. latency

Balancing speed and intelligence is paramount when picking an AI model for voice applications.

Real-time performance is critical for AI voice assistants. If you ask a question and have to wait several seconds for an answer, the experience no longer feels natural. Even small delays can disrupt the conversational flow and break the illusion of a human-like interaction. This is why modern voice AI systems strive to minimize latency (response delay) while maximizing intelligence (the quality and accuracy of responses). Both factors matter: the assistant needs to understand your request and reason about it (intelligence), and it needs to reply almost instantly (low latency) to feel responsive and natural.

Recent advances highlight this balance. For example, OpenAI reported that their new GPT-4o model cut the average voice response latency from about 5.4 seconds in the original GPT-4 to only ~0.32 seconds under minimal input conditions. That’s nearly human-level response speed, as humans take ~0.21s on average to begin responding.

However, real-world agentic use cases involve complex prompts and API calls, so inputs often include long user messages or chained tool use. These push input lengths to 5k to 10k tokens or more. A token is roughly equivalent to 3-4 characters of English text or about 0.75 words, so a 10k-token input is on the order of 7,500 words of dialogue, tool output, or memory content. For AI models, processing more tokens means more computation and higher latency. In these scenarios, GPT-4o responds in around 700ms, which is slower than the benchmark results suggest but still fast given the amount of context included.
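To make the token arithmetic above concrete, here is a minimal sketch that applies the same rule-of-thumb ratios. The function names and constants are illustrative assumptions, not part of any tokenizer library, and real token counts vary by model and language.

```python
import math

# Rule-of-thumb ratios taken from the paragraph above.
# These are approximations, not a real tokenizer.
CHARS_PER_TOKEN = 4.0    # upper end of the "3-4 characters" range
WORDS_PER_TOKEN = 0.75   # "about 0.75 words" per token


def estimate_tokens_from_text(text: str) -> int:
    """Rough token estimate based on character count only."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_words_from_tokens(tokens: int) -> int:
    """Rough number of English words that a token budget corresponds to."""
    return round(tokens * WORDS_PER_TOKEN)


if __name__ == "__main__":
    prompt = "What time does the pharmacy on Main Street close today?"
    print("estimated prompt tokens:", estimate_tokens_from_text(prompt))
    print("words in a 10k-token input:", estimate_words_from_tokens(10_000))
```

An estimate like this is only useful for sizing prompts ahead of time. For billing or hard context limits, the model's own tokenizer should be the source of truth.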

Achieving low latency without sacrificing too much intelligence is a technical balancing act. To better understand this trade-off, we’ll compare two leading AI models, GPT-4o and Qwen3-235B-A22B, to see how each balances brainpower and speed. We’ll also clarify the impact of reasoning mode on latency, and explain why some of the smartest models in the world are simply unusable for real-time voice. Finally, we’ll walk through when to prioritize lightning-fast responses versus when you might opt for a slower, smarter brain behind your voice assistant.

GPT-4o

GPT-4o is OpenAI’s flagship multimodal model introduced in May 2024. It boasts top-tier intelligence for non-reasoning models, and it ranks among the best in reasoning and language understanding with an MMLU score of around 80%. It excels at complex reasoning, understanding nuanced instructions, coding assistance, and creative tasks. It can follow multi-turn conversations with high comprehension and even perform real-time translations or analyses using its advanced reasoning abilities.

In real-time scenarios, GPT-4o is significantly more performant than earlier models. While it does not support an explicit “reasoning mode” like Qwen3, it is tuned to deliver strong reasoning within latency constraints, making it well suited for real-time voice applications. GPT-4o maintains high quality even with long prompts, API calls, and context windows.

OpenAI also offers several newer or experimental models that push the boundaries of intelligence, but they aren’t yet viable for real-time voice:

- GPT-4.1 is OpenAI’s most capable model for complex reasoning tasks. It outperforms GPT-4o on many benchmarks, but its latency quickly deteriorates with long inputs, running at about 1300ms. This makes it unsuitable for real-time voice applications.

- OpenAI’s o3-mini is a cost-efficient model that is competitive on both reasoning tasks and latency at moderate input lengths. However, GPT-4o remains more responsive and better optimized for real-time use cases, particularly when dealing with longer prompts or multi-turn interactions.

These models are well-suited for asynchronous use cases, but GPT-4o remains OpenAI’s most production-ready model for real-time, latency-sensitive AI voice applications.

Qwen3

Qwen3 is a new open-source flagship model from Alibaba Cloud’s Qwen series. It’s a 235-billion-parameter mixture-of-experts (MoE) model that activates only about 22B parameters per token. Because only a fraction of the network runs for each token, it can deliver impressive performance without incurring the latency of a dense model of the same size.

Its intelligence rivals top models like GPT-4o, and even surpasses them when its reasoning mode is enabled. In this thinking mode, Qwen3 can perform deep multi-step reasoning, solving complex problems in math, logic, and coding. With reasoning mode on, Qwen3’s MMLU knowledge score is 76.2%, rivaling GPT-4o’s 80%.

However, reasoning mode significantly increases latency, sometimes taking 30+ seconds to generate a final answer to a complex query. This is unacceptable for real-time voice, so Qwen3 needs to run in non-reasoning mode to deliver strong answers with reduced latency. In this mode, Qwen3 can deliver responses in roughly 300ms for typical dialogue tasks. When configured for speed, Qwen3 can outpace GPT-4o in responsiveness. The cost is a slight loss in reasoning depth per response, though the results are still sufficient for voice use cases.

Intelligence comparison

When comparing AI models, it’s essential to distinguish between reasoning mode and non-reasoning mode.

- Reasoning mode enables deeper multi-step logic but adds significant latency.

- Non-reasoning mode trades off some cognitive depth for the sake of real-time usability.

GPT-4o leads in intelligence for real-time use. Unlike larger models like o1 and o3, which are more intelligent but too slow for voice, GPT-4o is optimized for latency while still delivering high-quality reasoning. Even with longer prompts, it performs consistently well in benchmark tests and is exceptionally good at understanding nuance, handling multi-turn dialog, and following complex instructions.

Qwen3, especially in reasoning mode, closely rivals GPT-4o in terms of intelligence. It often performs better in code generation, logic, multilingual, and math-heavy tasks. However, it cannot run reasoning mode in real time. For voice, Qwen3 must operate in non-reasoning mode, where it still ranks near the top for open models, making it a strong alternative for those who want quality and control.

Latency comparison

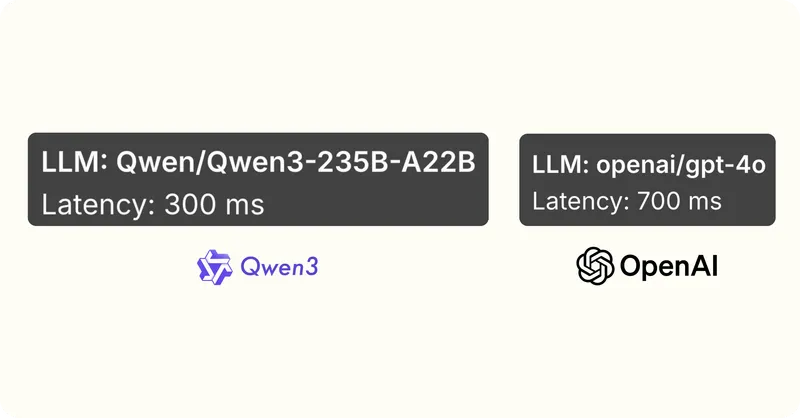

Latency is the most critical aspect of real-time communication, so latency stats matter. At Telnyx, we offer these directly within our AI Assistant Builder, available in the Mission Control Portal. Latency can be affected by model architecture, size, hardware, and serving stack. GPT-4o and Qwen3 each keep latency low for a different reason:

- GPT-4o benefits from OpenAI’s optimized infrastructure

- Qwen3 benefits from its efficient MoE setup

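In terms of latency, the models rank as follows, from fastest to slowest:

- Qwen3 in non-reasoning mode, running at roughly 300ms for typical dialogue tasks

- GPT-4o, running at around 700ms with long, agentic inputs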
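Figures like these depend on how latency is measured. For voice, the number that usually matters is the time until the first chunk of the response arrives, not the time to finish the whole answer. The sketch below shows one way to time both for any streaming response. `fake_model_stream` is a hypothetical stand-in for a real streaming client, and the delays in it are made up for illustration.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple


def measure_stream(
    stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[float, float, str]:
    """Consume a text stream and return (ms to first chunk, total ms, full text)."""
    start = clock()
    first_ms = None
    parts = []
    for chunk in stream:
        if first_ms is None:
            # Time until the first chunk is what the caller perceives as "latency".
            first_ms = (clock() - start) * 1000.0
        parts.append(chunk)
    total_ms = (clock() - start) * 1000.0
    if first_ms is None:
        first_ms = total_ms  # empty stream: nothing ever arrived
    return first_ms, total_ms, "".join(parts)


def fake_model_stream(first_delay_s: float = 0.05,
                      chunk_delay_s: float = 0.01) -> Iterator[str]:
    """Hypothetical stand-in for a streaming model response (not a real API)."""
    time.sleep(first_delay_s)  # simulated prompt processing before the first token
    for word in ["Sure,", " it", " closes", " at", " nine."]:
        yield word
        time.sleep(chunk_delay_s)  # simulated per-chunk generation time


if __name__ == "__main__":
    first_ms, total_ms, text = measure_stream(fake_model_stream())
    print(f"first chunk after {first_ms:.0f} ms, done after {total_ms:.0f} ms: {text!r}")
```

Because the generator only starts running once it is iterated, the timer in `measure_stream` also captures the initial delay before the first chunk. Running the same harness against each candidate model, with prompts of realistic length, gives numbers that can be compared directly.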

Recommendations: Intelligence vs. latency

Choosing the right model for a voice assistant depends on your use case requirements. Sometimes speed is king. For a snappy user experience or hardware-constrained environment, you simply can’t afford a 2-second pause. In other cases, intelligence is more important. Users might tolerate a bit of delay if the response quality is significantly better. The difference between a correct, detailed answer and a quick but wrong one can be decisive.

When to prioritize intelligence

If your assistant is handling:

- Complex support, tutoring, or advisory roles,

- Long, multi-turn conversations, or

- Tasks requiring high factual accuracy,

then intelligence matters more, so you should opt for GPT-4o.

When to prioritize latency

If your assistant is handling:

- Fast voice interactions, such as drive-thrus or smart home commands,

- On-device or embedded use cases, or

- Time-sensitive responses, such as navigation or control systems,

then latency is critical. Qwen3 in non-reasoning mode is the fastest option for real-time voice, at the cost of a little reasoning depth.
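The two checklists above amount to a simple rule: pick the most capable model that still fits your latency budget. Here is a minimal sketch of that rule. The latency values are the approximate figures quoted in this article, which were measured under different input conditions. The `quality_rank` ordering is an assumption based on the article's qualitative claims, and `ModelProfile` and `pick_model` are hypothetical names, not part of any SDK.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelProfile:
    name: str
    latency_ms: int     # approximate figure quoted in this article
    quality_rank: int   # 1 = strongest; assumed ordering, for illustration only


# Latency numbers come from the article; the ranking is an illustrative assumption.
PROFILES: List[ModelProfile] = [
    ModelProfile("GPT-4.1", 1300, 1),
    ModelProfile("GPT-4o", 700, 2),
    ModelProfile("Qwen3 (non-reasoning)", 300, 3),
]


def pick_model(latency_budget_ms: int,
               profiles: List[ModelProfile] = PROFILES) -> Optional[ModelProfile]:
    """Return the highest-ranked model whose latency fits the budget, or None."""
    candidates = [p for p in profiles if p.latency_ms <= latency_budget_ms]
    if not candidates:
        return None
    return min(candidates, key=lambda p: p.quality_rank)


if __name__ == "__main__":
    for budget in (200, 500, 800, 2000):
        choice = pick_model(budget)
        print(budget, "ms ->", choice.name if choice else "no model fits")
```

In practice the profile table would be filled with latencies measured on your own prompts and infrastructure, since the right cutoff depends on how long a pause your users will tolerate.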

Recommendations

As a final takeaway:

- Use GPT-4o for top-tier quality with acceptable latency.

- Use Qwen3 in non-reasoning mode when you need ultra-low latency and can accept slightly shallower reasoning.

Matching model tradeoffs to real-world voice AI

Choosing the right model for a voice assistant means balancing intelligence and latency. GPT-4o delivers best-in-class intelligence with solid latency, while Qwen3 gives developers the flexibility to toggle between speed and deep reasoning.

At Telnyx, we’ve designed our AI Assistant Builder to support all of these models, giving you the freedom to match performance with your use case. Whether you're optimizing for low-latency interactions in a drive-thru or deep reasoning in a complex support flow, Telnyx lets you select the right model, deploy it at the edge or in the cloud, and maintain full control over cost and quality.

Match your choice to your goals. For fast, responsive experiences, prioritize latency. For high-stakes conversations, lean into intelligence. And when you need both? Telnyx AI assistants help you build hybrid systems that intelligently balance both in real time.

Contact our team of experts to compare GPT-4o, Qwen3, and other leading models—and find the ideal balance of speed and intelligence for your AI assistant.

Want faster AI responses without tradeoffs? Join our subreddit.
