
Best ElevenLabs alternative for voice AI that scales

Looking for an ElevenLabs alternative? Explore five competing options—and why Telnyx is built for voice AI at scale, not just voice synthesis.

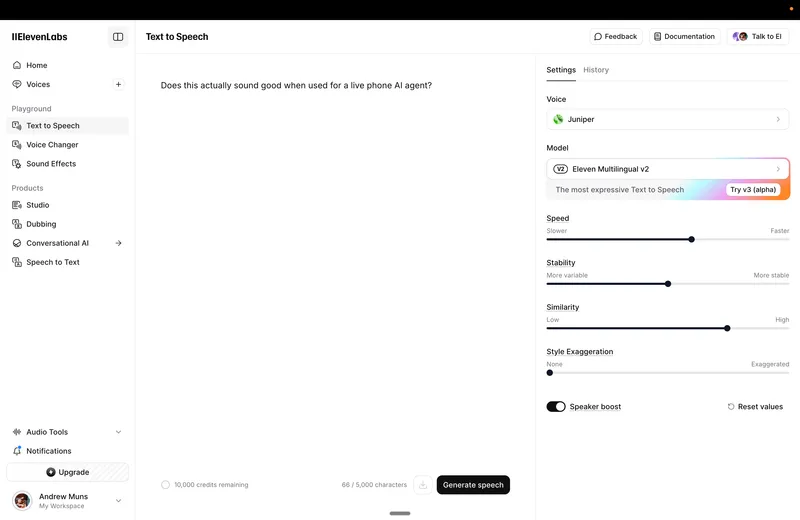

Text-to-speech platforms such as ElevenLabs have made it easier than ever to generate lifelike, expressive voices. However, as teams move beyond content narration and into real-time, voice-driven interactions, they’re discovering that high-quality audio alone is not enough to build exceptional agents.

Today’s users expect conversational assistants that feel natural, responsive, and intelligent—especially when those agents operate in real time across voice calls, support lines, or dynamic customer workflows. That means agents need more than realistic voices. They need infrastructure that can handle live audio streaming, interruptions, session control, and low-latency telephony.

A growing set of platforms is emerging to meet those needs, but not all are built to support production-grade voice agents.

In this post, we’ll compare top alternatives to ElevenLabs for real-time agent use cases. You’ll see how different platforms stack up when it comes to streaming, telephony integration, and session control, so you can choose the right foundation for building responsive, scalable voice agents.

Where ElevenLabs agents fall short in production

ElevenLabs gives developers a quick way to create expressive, voice-driven agents. However, when those agents need to operate in real time, especially across live phone calls or dynamic user sessions, teams encounter technical and architectural roadblocks.

All inference is cloud-hosted on NVIDIA GPU clusters based in the U.S., with no edge presence or regional inference outside North America. That creates latency issues for users in other regions and offers no control over where or how audio is routed.

The ElevenLabs platform offers a streaming API, but it’s optimized for progressive TTS delivery, not true two-way audio or live interaction. There’s no ability to interrupt prompts mid-stream, no access to session state, and no support for overlapping speech. These limitations make it difficult to build agents that feel responsive and adapt on the fly.

There’s also no built-in support for telephony, SIP, or PSTN integration. Developers must connect external CPaaS providers to enable their agents to access the phone network, adding complexity and fragmentation. And because ElevenLabs doesn’t expose lower-level infrastructure, there’s no visibility into call events or media flow, making it harder to debug, optimize, or scale.

Teams experimenting with ElevenLabs agents often find that while the voices sound lifelike, the platform isn’t designed to support real-time, production-ready voice automation.

What production-ready agents need

Many platforms—like ElevenLabs—make it easy to build expressive, voice-driven agents. But when it comes to choosing a provider for real-world deployment, especially over telephony, it’s critical to evaluate what’s beneath the surface. Demo-quality voice is one thing; operating reliably in live, dynamic environments is another.

That starts with true bidirectional streaming. Agents should be able to speak and listen simultaneously, rather than being locked into rigid, turn-based exchanges. Users expect assistants that respond in real time, not after a pause or delay.
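To make the distinction concrete, here is a minimal Python sketch of full-duplex handling with `asyncio`. It is an illustration only: the in-memory queues stand in for a real media stream, and the names `listen`, `speak`, and `run_duplex` are hypothetical rather than part of any vendor’s API. The point is that listening and speaking run as concurrent tasks instead of alternating.

```python
import asyncio


async def listen(inbound: asyncio.Queue, heard: list) -> None:
    """Collect caller audio frames until a None sentinel marks end of stream."""
    while True:
        frame = await inbound.get()
        if frame is None:
            break
        heard.append(frame)


async def speak(outbound: asyncio.Queue, frames: list) -> None:
    """Send agent audio frames, yielding between frames so listening continues."""
    for frame in frames:
        await outbound.put(frame)
        await asyncio.sleep(0)  # hand control back to the event loop


async def run_duplex(caller_frames: list, agent_frames: list) -> tuple:
    """Run listening and speaking concurrently over in-memory queues (assumed transport)."""
    inbound: asyncio.Queue = asyncio.Queue()
    outbound: asyncio.Queue = asyncio.Queue()
    for frame in caller_frames:
        inbound.put_nowait(frame)
    inbound.put_nowait(None)  # sentinel: caller stream finished

    heard: list = []
    # Both coroutines are scheduled together; neither waits for the other to finish.
    await asyncio.gather(listen(inbound, heard), speak(outbound, agent_frames))

    sent = []
    while not outbound.empty():
        sent.append(outbound.get_nowait())
    return heard, sent
```

Calling `asyncio.run(run_duplex([b"hi"], [b"hello"]))` returns the frames heard and the frames sent. In a real agent the queues would be replaced by whatever streaming transport the platform exposes.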

Interrupt handling is essential. In a live setting, users often talk over prompts, change direction, or clarify mid-sentence. Without the ability to interrupt and reroute, conversations often feel scripted and break down under the natural flow of human behavior.
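One common way to implement this kind of barge-in is to race prompt playback against a "caller started speaking" signal and cancel playback when the signal wins. The sketch below assumes that pattern; `play_prompt`, `speak_with_barge_in`, and the `caller_spoke` event are hypothetical names, and the `sleep` call is only a stand-in for the time it takes to play one audio frame.

```python
import asyncio


async def play_prompt(frames: list, played: list) -> None:
    """Play frames one at a time, recording how far playback got."""
    for frame in frames:
        played.append(frame)
        await asyncio.sleep(0.01)  # stand-in for one frame of audio playback


async def speak_with_barge_in(frames: list, caller_spoke: asyncio.Event) -> tuple:
    """Play a prompt, but stop as soon as the caller starts speaking."""
    played: list = []
    playback = asyncio.create_task(play_prompt(frames, played))
    barge_in = asyncio.create_task(caller_spoke.wait())

    # Wait for whichever finishes first: the prompt or the interruption.
    done, _ = await asyncio.wait(
        {playback, barge_in}, return_when=asyncio.FIRST_COMPLETED
    )
    interrupted = barge_in in done and not playback.done()

    # Cancel whichever task is still running and let it unwind cleanly.
    for task in (playback, barge_in):
        if not task.done():
            task.cancel()
    await asyncio.gather(playback, barge_in, return_exceptions=True)
    return played, interrupted
```

When `interrupted` is true, the agent can discard the rest of the prompt and route the caller’s new input to its reasoning step instead of finishing a scripted line.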

Session orchestration is another core requirement. Developers need access to call state, session metadata, and voice events to manage logic, pass data between systems, and adapt dynamically as context changes.
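As a rough illustration of what access to call state and voice events can look like in application code, here is a small Python sketch of a session object driven by events. The event names (`call.answered`, `speech.started`, and so on), the states, and the `CallSession` class are assumptions made up for this example, not the schema of any particular platform.

```python
from dataclasses import dataclass, field

# Hypothetical state machine: (current state, event type) -> next state.
TRANSITIONS = {
    ("ringing", "call.answered"): "active",
    ("active", "speech.started"): "listening",
    ("listening", "speech.ended"): "active",
    ("active", "call.hangup"): "ended",
    ("listening", "call.hangup"): "ended",
}


@dataclass
class CallSession:
    """Tracks state, metadata, and event history for one call."""
    call_id: str
    state: str = "ringing"
    metadata: dict = field(default_factory=dict)
    history: list = field(default_factory=list)


def apply_event(session: CallSession, event_type: str, payload: dict = None) -> CallSession:
    """Record an event, merge its payload into metadata, and advance the state."""
    session.history.append(event_type)
    session.metadata.update(payload or {})
    # Unknown (state, event) pairs leave the state unchanged.
    session.state = TRANSITIONS.get((session.state, event_type), session.state)
    return session
```

Keeping state transitions in one table makes it easier to pass context to other systems and to reason about what the agent should do when an unexpected event arrives.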

Infrastructure matters too. Running voice agents over the public internet introduces unpredictable latency and limits control. A platform built for real-time interaction needs edge-level processing, dedicated voice routing, and direct access to SIP and PSTN systems.

These capabilities are what distinguish a prototype from a production-ready agent. Without them, even the most lifelike voices fall short in live environments.

Top ElevenLabs alternatives for real-time voice agents

Most ElevenLabs alternatives focus on generating expressive audio, but building real-time, interactive agents requires more than lifelike speech. As teams move from narrated content to live conversations, they need platforms that support two-way audio, real-time control, and scalable infrastructure.

ElevenLabs

ElevenLabs delivers some of the most expressive and natural-sounding TTS on the market, with fast synthesis and flexible voice cloning. It’s a powerful choice for generating audio output that sounds human. However, ElevenLabs is not a full-stack platform for real-time interaction: there’s no built-in support for bidirectional streaming, telephony integration, or session-level orchestration. Teams must pair it with external tools to handle live conversations.

Twilio + LLMs

Twilio offers global telephony infrastructure and well-documented APIs, making it a common choice for teams already using its communication stack. But Twilio doesn’t provide native tools for agent orchestration or real-time streaming logic. Developers need to integrate their own TTS, STT, and LLMs—and write custom glue code to handle turn-taking and coordination, which can introduce latency and complexity.

Deepgram + ElevenLabs + OpenAI (DIY stack)

This modular approach gives developers control over each component: Deepgram for fast STT, ElevenLabs for expressive TTS, and OpenAI for agent reasoning. It’s flexible, but not turnkey. Without a unifying orchestration layer or native SIP/PSTN support, teams often face high latency, inconsistent timing, and complex state management across services.
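One way to see where latency accumulates in a stitched-together stack is to time each hop of a single conversational turn. The Python sketch below does this with stub functions: `fake_stt`, `fake_llm`, and `fake_tts` are placeholders, not calls to Deepgram, OpenAI, or ElevenLabs, and the timings you get depend entirely on what you plug in.

```python
import time
from typing import Callable, Dict, List, Tuple


def fake_stt(audio: bytes) -> str:
    """Placeholder for a speech-to-text request."""
    return audio.decode("utf-8")


def fake_llm(text: str) -> str:
    """Placeholder for a language-model request."""
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    """Placeholder for a text-to-speech request."""
    return text.encode("utf-8")


# Hypothetical three-hop pipeline; swap in real client calls to measure a real stack.
STAGES: List[Tuple[str, Callable]] = [
    ("stt", fake_stt),
    ("llm", fake_llm),
    ("tts", fake_tts),
]


def run_turn(audio: bytes, stages=STAGES) -> Tuple[object, Dict[str, float]]:
    """Pass one caller utterance through each stage and time every hop."""
    value: object = audio
    timings: Dict[str, float] = {}
    for name, stage in stages:
        start = time.perf_counter()
        value = stage(value)
        timings[name] = time.perf_counter() - start
    return value, timings
```

Because the stages run one after another, the latency of a turn is at least the sum of the per-stage timings, before counting any network hops between providers. That is why each additional service in the chain matters.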

AssemblyAI + Resemble.ai

This stack works well for teams experimenting with voice output in lower-stakes or asynchronous contexts. AssemblyAI delivers strong transcription, and Resemble.ai adds customizable TTS. But these tools lack bi-directional audio streaming, barge-in detection, and native telephony integration—key requirements for interactive, real-time agents.

Play.ht / WellSaid Labs

These platforms shine in media and content narration. They produce polished, emotionally expressive audio with minimal setup. However, they’re not built for live interaction. There’s no support for session state, real-time routing, or full-duplex streaming, which limits their use for interactive agent applications.

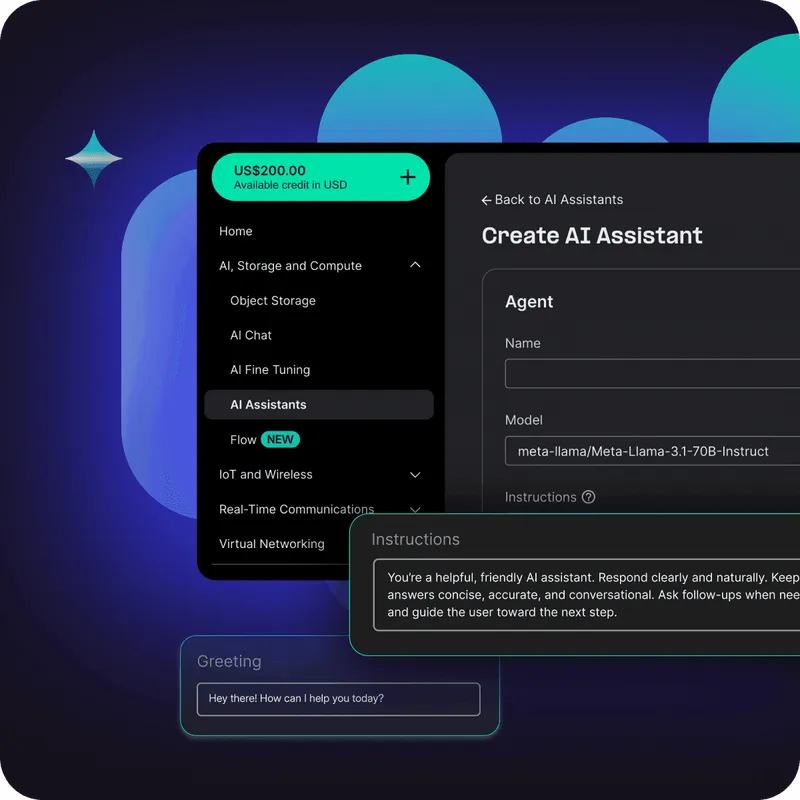

Telnyx

Telnyx is built from the ground up for real-time voice agent deployment. It offers sub-30ms latency, SIP and PSTN integration, bidirectional streaming, barge-in handling, and full session orchestration. This makes it ideal for teams moving beyond prototypes and into live customer-facing scenarios—where performance, reliability, and control matter.

Feature comparison: real-time voice agent platforms

The table below compares top alternatives based on their support for production-grade voice agents, not just text-to-speech capabilities.

| Platform | Best for | Agentic strengths | Limitations |
|---|---|---|---|
| Twilio + LLMs | Teams already using Twilio for telephony | Global voice infrastructure, programmable APIs | No native agent logic or streaming orchestration. Requires external TTS/STT/LLM and glue code. |
| Deepgram + ElevenLabs + OpenAI (DIY stack) | Builders assembling custom agent stacks | High-quality STT and expressive TTS, flexible LLM pairing | No SIP/PSTN or real-time coordination. Latency and complexity without an orchestration layer. |
| AssemblyAI + Resemble.ai | Teams experimenting with async voice agents | Accurate transcription, developer-friendly TTS | No bi-directional streaming or interrupt handling. No native telephony or session state control. |
| Play.ht / WellSaid Labs | Content narration or basic voice assistants | High-fidelity audio output, emotional tone range | Not designed for real-time use. No session awareness, routing control, or live streaming support. |
| Telnyx | Launching scalable, real-time voice agents | SIP/PSTN support, sub-30ms latency, streaming audio, session orchestration, and interrupt handling | Built for production. Less suited for simple narration or lightweight prototypes. |

If you’ve already built with ElevenLabs, there’s no need to start over. You can keep the voices you like and upgrade the rest of your stack for real-time performance.

Keep your voices. Upgrade your stack.

ElevenLabs is a popular choice for creating high-quality, expressive voices, and the agents or workflows you’ve built on its models don’t have to be thrown away.

Telnyx lets you keep the voice assets you’ve already invested in while upgrading the rest of your stack for real-time performance. With one-click import support, you can bring your existing ElevenLabs assistant into a programmable platform designed for live interaction. No rebuilds required.

Your agent can continue speaking in the same voice, but now with the ability to interrupt prompts midstream, route calls intelligently, access session state, and stream audio globally in real time. This takes you from content-grade synthesis to infrastructure that supports production-grade automation.

It’s not a full migration. It’s a step forward that gives your team better performance, deeper control, and a more flexible foundation for scaling voice agents in the real world.

Build real-time voice agents that scale with Telnyx

Most teams building with ElevenLabs know what they want: voice agents that sound natural and respond intelligently. However, once those agents need to operate in real time, especially over the phone, teams run into limitations in streaming, control, and infrastructure. Without the ability to manage sessions, interrupt prompts, or route calls efficiently, even the most expressive voices fall short in production.

Telnyx gives teams the infrastructure to bridge that gap. It starts with the Telnyx Voice API, which lets you control calls, manage sessions, and orchestrate voice flows with low-latency routing across a private global network. Then you layer in Telnyx Voice AI to power real-time transcription, model logic, and dynamic text-to-speech—all designed for fast, flexible interaction.

And with one-click import support for ElevenLabs assistants, you can keep the voices you’ve already built while upgrading the rest of your stack for live performance. Because Telnyx owns the full stack—from SIP to AI to edge media—your agents run with greater speed, clarity, and control, without the complexity of stitching together separate systems.

Contact our team of experts to explore how Telnyx can turn your voice interactions into engaging, real-time experiences.

Exploring ElevenLabs alternatives? Join our subreddit.
