Zephyr 7B beta

An AI model that combines speed, affordability, and reliability.

Zephyr 7B beta, licensed under MIT, is an impressive large language model with a mid-sized context window. Known for generating human-like responses, it excels in roles such as chatbots and virtual assistants, though it may face challenges with expert-level tasks.

| License | MIT |
|---|---|
| Context window (tokens) | 32768 |

Use cases for Zephyr 7B beta

- Semantic retrieval applications: With a high MT Bench score and a larger context window, Zephyr 7B Beta is ideal for semantic search, enhancing information retrieval based on meaning.

- Natural language processing: Zephyr 7B Beta's well-rounded performance metrics make it suitable for various NLP tasks, including sentiment analysis, text classification, and language generation.

- Cost-effective AI research: Priced competitively at $0.0002 per 1,000 tokens, this model offers AI researchers a balance between budget and model performance.

| Arena Elo | 1053 |
|---|---|
| MMLU | 61.4 |
| MT Bench | 7.34 |

Zephyr 7B Beta demonstrates average performance in AI response quality, translation capability, and knowledge grounding.


The cost per 1,000 tokens for running the model with Telnyx Inference is $0.0002. To illustrate, if an organization were to analyze 1,000,000 customer chats, and each chat consisted of 1,000 tokens, the total cost would be $200.
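The arithmetic behind that figure can be checked with a short sketch. It uses only the price and workload quoted above; the function name `estimate_cost` is illustrative and is not part of any Telnyx API.

```python
from decimal import Decimal

# Price quoted in the text: $0.0002 per 1,000 tokens.
PRICE_PER_1K_TOKENS = Decimal("0.0002")


def estimate_cost(num_chats: int, tokens_per_chat: int) -> Decimal:
    """Return the estimated cost in USD for a workload of equally sized chats."""
    total_tokens = num_chats * tokens_per_chat
    # Convert tokens to units of 1,000 tokens, then apply the quoted price.
    return PRICE_PER_1K_TOKENS * Decimal(total_tokens) / Decimal(1000)


# 1,000,000 chats x 1,000 tokens = 1,000,000,000 tokens in total.
cost = estimate_cost(1_000_000, 1_000)
print(f"${cost:,.2f}")  # $200.00
```

Decimal arithmetic is used here so that the very small per-token price does not pick up floating-point rounding noise.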

What's Twitter saying?

- Developer milestone: Jerry Liu underscores the achievement of ensuring reliable performance of 7B models in agent tasks, highlighting the cost-effectiveness and reduced latency benefits. Zephyr 7B Beta emerges as a promising choice for developers. (Source: Jerry Liu)

- Improved chat capabilities: Jeffrey Morgan notes that Zephyr 7B Beta, the second model in the Zephyr series, excels in chat applications and often surpasses larger models like Llama 2 70B. (Source: Jeffrey Morgan)

- Model comparison: Yuvi introduces a playground for comparing Zephyr 7B Beta with its predecessor, Zephyr 7B Alpha, allowing users to directly experience both models. (Source: Yuvi)

Explore Our LLM Library

Discover the power and diversity of large language models available with Telnyx. Explore the options below to find the perfect model for your project.

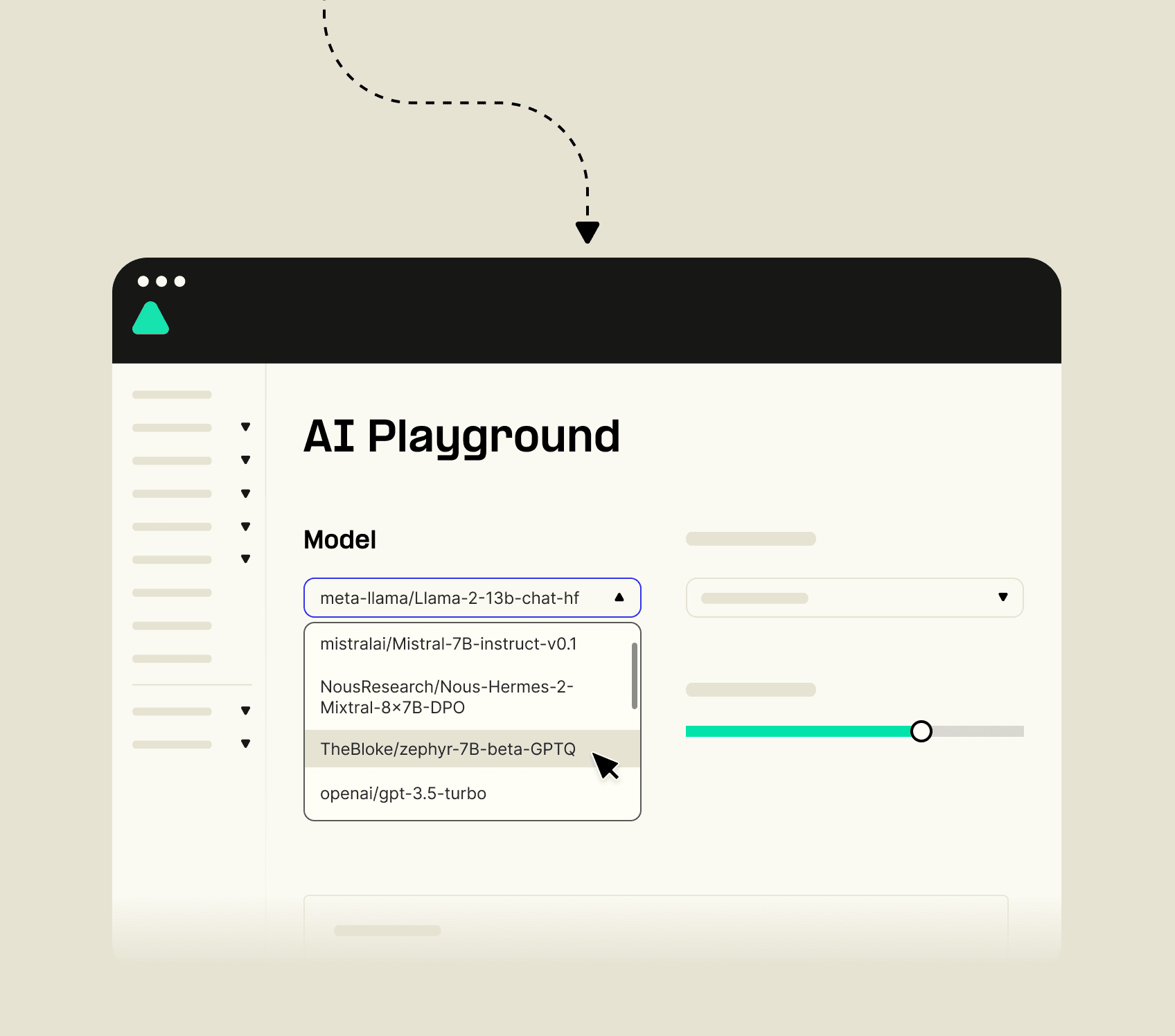

Chat with an LLM

Powered by our own GPU infrastructure, select a large language model, add a prompt, and chat away. For unlimited chats, sign up for a free account on our Mission Control Portal here.

Get started

Check out our helpful tools to help get you started.

What is Zephyr-7B beta?

Zephyr-7B beta is an innovative Large Language Model (LLM) with 7 billion parameters, designed for high performance and efficiency. It is developed by the Hugging Face H4 team and fine-tuned from the Mistral-7B model. Unlike larger models, it can run on consumer hardware, making it accessible for a wide range of applications.

How does Zephyr-7B beta differ from other LLMs and GPT models?

Zephyr-7B beta distinguishes itself by its size, efficiency, and training methods. It is small enough to run on a laptop, unlike larger models that need more computational resources. It does not use reinforcement learning from human feedback (RLHF) or response filtering, maintaining an authentic response style. Additionally, it is open-source, providing transparency and allowing free use and customization.

Can Zephyr-7B beta be run on consumer hardware?

Yes, one of the key strengths of Zephyr-7B beta is its efficiency, which allows it to be run on consumer hardware, including laptops. This makes it more accessible and practical for a wide range of applications compared to larger models that require significant computational resources.
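As a rough illustration of why a 7-billion-parameter model can fit on consumer hardware, the sketch below estimates the memory needed for the model weights alone. The precision formats and bytes-per-parameter values are assumptions chosen for illustration, and the estimate ignores activations, the KV cache, and runtime overhead, so real usage will be higher.

```python
# "7 billion parameters", as stated in the text (the exact count may differ).
PARAMS = 7_000_000_000

# Assumed storage cost per parameter for common precisions (illustrative).
BYTES_PER_PARAM = {"float32": 4.0, "float16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Approximate memory for the weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"{precision}: ~{weight_memory_gb(PARAMS, precision):.1f} GB")
```

Under these assumptions, half-precision weights need about 14 GB, while 4-bit quantized weights need about 3.5 GB, which is within reach of many laptops.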

What are the training methods used for Zephyr-7B beta?

Zephyr-7B beta was trained using Direct Preference Optimization (DPO) on a mix of publicly available synthetic datasets. Unlike some other models, it does not use methods like reinforcement learning from human feedback (RLHF) or response filtering, allowing for a more authentic response style.
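To make the DPO objective more concrete, here is a minimal sketch of the standard DPO loss for a single preference pair. It is not the training code used for Zephyr-7B beta: the function names, the example log-probabilities, and the default `beta=0.1` are assumptions for illustration only.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; controls how far the policy may drift
) -> float:
    """DPO loss for one (chosen, rejected) response pair.

    Inputs are summed log-probabilities of each response under the policy
    being trained and under a frozen reference model.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)) equals softplus(-logits).
    return softplus(-logits)


# If the policy matches the reference, the loss is log(2).
print(dpo_loss(-5.0, -7.0, -5.0, -7.0))
# If the policy favours the chosen response more than the reference does,
# the loss drops below log(2).
print(dpo_loss(-4.0, -8.0, -5.0, -7.0))
```

The loss shrinks as the policy assigns relatively more probability to the preferred response than the reference model does, which is how DPO learns from preference pairs without a separate reward model.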

Is Zephyr-7B beta open-source?

Yes, Zephyr-7B beta is open-source. This transparency allows for free use, customization, and community engagement, distinguishing it from proprietary models like ChatGPT.

What tasks does Zephyr-7B beta excel in?

Zephyr-7B beta demonstrates impressive performance in tasks such as writing, roleplay, translating, and summarizing texts. However, it may not be as strong in writing programming code or solving math problems.

Where can I use Zephyr-7B beta?

Zephyr-7B beta can be used in a wide range of applications, including natural language processing tasks, chatbots, and content generation. It can also be integrated into connectivity apps through platforms like Telnyx.
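For chatbot-style use, the model expects conversations in a specific prompt layout. The sketch below assembles such a prompt by hand. The role tags and the `</s>` end-of-sequence marker follow the format published on the model's Hugging Face card, but treat them as an assumption and verify against the tokenizer's own chat template before relying on them. The function name `build_zephyr_prompt` is hypothetical.

```python
from typing import Dict, List

# End-of-sequence marker assumed from the published model card.
EOS = "</s>"


def build_zephyr_prompt(messages: List[Dict[str, str]]) -> str:
    """Format chat messages into a single prompt string.

    Each message is a dict with a "role" ("system", "user", or "assistant")
    and a "content" string. The prompt ends with an open assistant turn so
    the model continues from there.
    """
    parts = [f"<|{m['role']}|>\n{m['content']}{EOS}\n" for m in messages]
    parts.append("<|assistant|>\n")
    return "".join(parts)


prompt = build_zephyr_prompt(
    [
        {"role": "system", "content": "You are a helpful support assistant."},
        {"role": "user", "content": "How do I reset my password?"},
    ]
)
print(prompt)
```

Hosted inference services typically apply this formatting for you when you send structured chat messages, so manual formatting like this is mainly useful when running the model locally.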

How does Zephyr-7B beta ensure the quality of its responses?

Zephyr-7B beta is fine-tuned on a variety of synthetic datasets and has demonstrated accuracy close to GPT-4 in writing and roleplay tasks. However, as with any LLM, responses may occasionally contain inappropriate content, so ongoing monitoring and moderation remain important.

Can I contribute to the development of Zephyr-7B beta?

Yes, as an open-source project, Zephyr-7B beta welcomes contributions from the community. Whether it's through developing new features, providing feedback, or improving existing functionalities, community involvement plays a crucial role in its development. For contributing, check out the Zephyr-7B beta repository on GitHub.