
Best Vapi alternative for voice AI that scales

Looking for a Vapi alternative? Telnyx delivers a lower-latency, more scalable Voice AI platform built for developers and product teams.

With the voice AI agent market projected to reach $47.5 billion by 2034, developers are racing to build AI-powered voice applications that are fast, natural, and production-ready. Developer platforms like Vapi promise a quick onramp, letting teams stitch together SIP trunking, transcription, and language models into a basic voice-based AI assistant.

But what works for a prototype often breaks down in production. Latency creeps in. Responses lag. Call quality degrades. And scaling becomes a headache just as demand ramps up, especially in high-stakes environments like support centers, logistics operations, and dispatch systems.

To meet these rising expectations, teams require more than glue code and APIs. They need purpose-built infrastructure, including low-latency media handling, real-time speech pipelines, and end-to-end control over voice, compute, and AI.

This post examines the disconnect between quick-start voice AI toolkits and truly production-ready infrastructure. We’ll walk through what to look for in a real-time voice platform, explore the top Vapi alternatives, and explain how teams can transition from prototype to production without compromising on quality, latency, or control.

Why developers outgrow Vapi

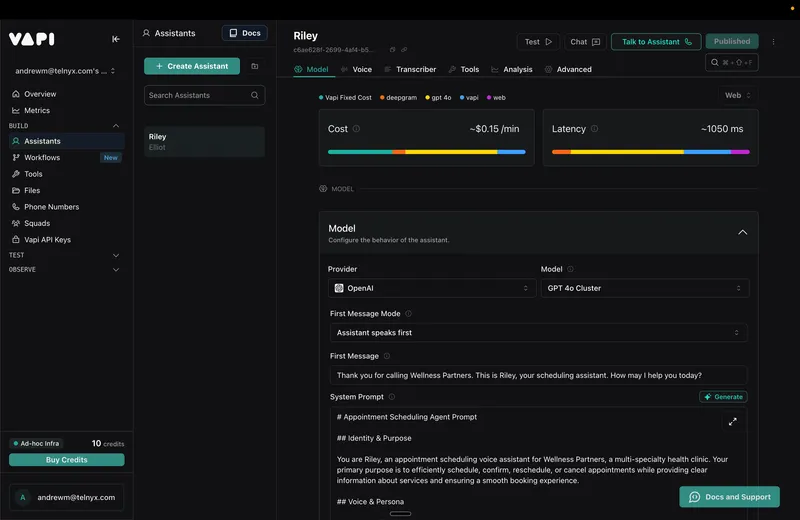

Vapi was created with flexibility in mind, which is appealing for developers still figuring out how different components fit together. However, as developers aim to move from prototyping to real-world applications, this flexibility can become a burden.

In production, that flexibility comes at a cost. Audio travels over the public internet, so latency is unpredictable. If speech recognition depends on a third-party engine, every request adds another network hop and another point of failure. Interruptions (barge-in) often don’t work as expected, especially at higher concurrency. And without direct visibility into the infrastructure, you're left guessing when things will break. These problems compound as demand grows, particularly in fast-moving fields such as healthcare and logistics, which is why teams start looking for a more integrated stack.

These challenges grow with scale. A live voice assistant that works in testing might fail under real-world load, dropping audio, missing utterances, or pausing awkwardly between turns. For teams building IVRs, customer support agents, or voice-driven dispatch tools, these problems are not edge cases. They’re blockers to going live.

What matters in a voice AI platform

Low latency is non-negotiable. If your assistant can’t respond in near real time, it breaks the illusion of natural conversation. Even sub-second delays are noticeable, and anything longer leads to user frustration and abandonment.
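To make the latency point concrete, here is a minimal sketch of a per-turn latency budget. The stage names, the millisecond values, and the 500 ms budget are illustrative placeholders, not measurements of Vapi, Telnyx, or any other platform.

```python
# Illustrative per-turn latency budget.
# All values are placeholders, not measurements of any real platform.
STAGE_LATENCY_MS = {
    "network_in": 40,
    "speech_to_text": 150,
    "llm_first_token": 350,
    "text_to_speech": 120,
    "network_out": 40,
}


def turn_latency_ms(stages: dict[str, int]) -> int:
    """Total time from end of user speech to first audio of the reply."""
    return sum(stages.values())


def over_budget(stages: dict[str, int], budget_ms: int) -> list[str]:
    """Return stage names, costliest first, if the budget is exceeded."""
    if turn_latency_ms(stages) <= budget_ms:
        return []
    return sorted(stages, key=stages.get, reverse=True)


total = turn_latency_ms(STAGE_LATENCY_MS)
print(f"total: {total} ms")
print("largest contributors:", over_budget(STAGE_LATENCY_MS, budget_ms=500)[:2])
```

Summing the stages this way shows why every extra hop matters: each one is added to the delay the caller hears before the assistant starts speaking.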

Beyond speed, the platform needs to support real-time audio streaming, barge-in handling, and flexible session control. Human conversations aren’t turn-based. Voice AI systems need to adapt on the fly, update mid-utterance, and recover gracefully when users interrupt.
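Barge-in handling can be pictured as a small state machine: if the user starts talking while the agent is still speaking, playback stops and the system goes back to listening. The sketch below is a simplified illustration. The `Turn` class, its method names, and the action strings are hypothetical and do not correspond to any vendor's API.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class State(Enum):
    LISTENING = auto()
    SPEAKING = auto()


@dataclass
class Turn:
    """Tracks who has the floor and records the actions a media layer would take."""
    state: State = State.LISTENING
    actions: list[str] = field(default_factory=list)

    def on_agent_audio_start(self) -> None:
        self.state = State.SPEAKING
        self.actions.append("play")

    def on_agent_audio_end(self) -> None:
        self.state = State.LISTENING

    def on_user_speech(self) -> None:
        # Barge-in: the user talks while the agent is still speaking,
        # so playback is cut before transcription continues.
        if self.state is State.SPEAKING:
            self.actions.append("stop_playback")
            self.state = State.LISTENING
        self.actions.append("transcribe")


turn = Turn()
turn.on_agent_audio_start()
turn.on_user_speech()
print(turn.actions)  # ['play', 'stop_playback', 'transcribe']
```

In a real deployment the hard part is not this logic but how quickly the speech-detection event reaches it, which is where media-path latency comes back in.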

Observability matters too. When something goes wrong in a call, you shouldn’t need to piece together logs from a SIP provider, a transcription engine, and an LLM integration. A production-grade platform provides visibility into every stage of the session—voice ingress, audio events, and LLM responses—enabling you to debug quickly and operate with confidence.
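As a rough illustration of what end-to-end visibility buys you, the sketch below merges events from different stages into one timeline per call and reports the gap between consecutive stages. The event shape (`call_id`, `stage`, `t_ms`) and the sample values are assumptions made for this example, not a real log format.

```python
from collections import defaultdict

# Hypothetical events, arriving out of order from different layers.
EVENTS = [
    {"call_id": "c1", "stage": "voice_ingress", "t_ms": 0},
    {"call_id": "c1", "stage": "llm_response", "t_ms": 620},
    {"call_id": "c2", "stage": "voice_ingress", "t_ms": 5},
    {"call_id": "c1", "stage": "audio_event", "t_ms": 180},
]


def timeline_by_call(events):
    """Group events by call and order each call's events by timestamp."""
    calls = defaultdict(list)
    for event in events:
        calls[event["call_id"]].append(event)
    return {cid: sorted(evts, key=lambda e: e["t_ms"]) for cid, evts in calls.items()}


def stage_gaps(timeline):
    """Elapsed milliseconds between consecutive stages of one call."""
    return [
        (a["stage"], b["stage"], b["t_ms"] - a["t_ms"])
        for a, b in zip(timeline, timeline[1:])
    ]


for call_id, timeline in timeline_by_call(EVENTS).items():
    print(call_id, stage_gaps(timeline))
```

When every stage shares one call identifier and one clock, this kind of join is trivial. When the events live in three vendors' dashboards, it becomes manual detective work.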

Top alternatives to Vapi

Several platforms offer strong alternatives to Vapi, each bringing different strengths and tradeoffs to the table. In this section, we’ll compare how they perform when it comes to building production-ready voice AI systems.

Voiceflow

Voiceflow is a popular tool for teams that want to visually prototype conversational flows. Its drag-and-drop interface makes it easy to design and test voice assistants without writing code. But it’s primarily built for prototyping—not production—so it lacks real-time audio control, infrastructure reliability, and flexibility with language models.

Synthflow

Synthflow offers a fast, no-code path to launching voice bots. It’s great for quickly validating ideas or spinning up small-scale assistants. However, because you don’t manage the SIP layer or transcription engine, you’re limited in how much you can customize or scale. Latency and platform lock-in can become issues as your use case matures.

Rasa + SIP stack

Some teams assemble their own stack using open-source frameworks, such as Rasa for conversational logic and providers like Twilio for SIP and media. This setup provides control and flexibility, but it also introduces significant operational complexity. You’ll need to integrate and maintain every component, from transcription to audio routing to observability. This often leads to brittle integrations, limited vendor support, higher total costs, and a constant burden of maintaining compatibility across fast-moving tools.

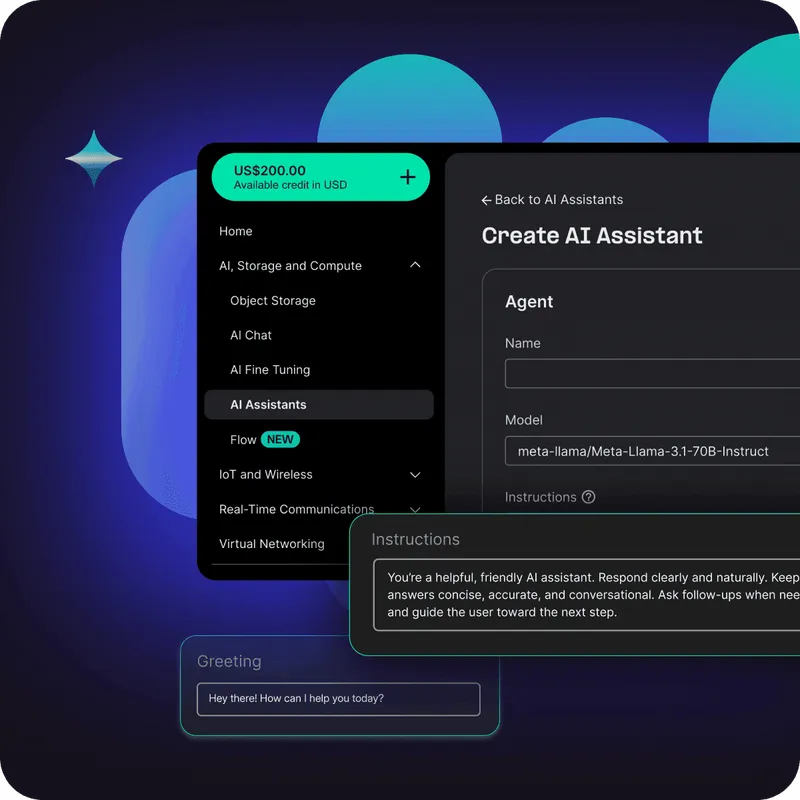

Telnyx

Telnyx takes an integrated approach, combining global voice infrastructure, real-time media handling, and Voice AI capabilities into a single platform. It eliminates the need to stitch together third-party services, making it easier to build scalable, low-latency applications out of the box. Unlike most alternatives, including Vapi, Telnyx gives teams full control over every layer of the voice stack, enabling faster response times, higher reliability, and greater flexibility in real-world deployments.

Vapi alternatives comparison

Here’s how top Vapi alternatives compare when it comes to building scalable, real-time voice AI applications.

| Feature | Vapi | Twilio + Rasa | Voiceflow | Synthflow | Telnyx |
|---|---|---|---|---|---|
| Call control | Basic (via API) | Custom-built | Visual only; no SIP access | No access to SIP/media | Full native control |
| Real-time transcription | Requires integration | Third-party dependent | Not supported | Abstracted; not customizable | In-house, sub-30ms streaming |
| Audio latency | Variable (public internet) | Varies with setup | N/A – playback only | Unpredictable at scale | Sub-30ms roundtrip |
| Media + SIP integration | BYO / separate | DIY via Twilio & peers | Not supported | Fully abstracted | Fully integrated |
| Observability | Limited | Fragmented / DIY | Minimal | Basic | Built-in, end-to-end |
| LLM flexibility | Some model options | Fully customizable | Limited model control | Predefined flows only | Bring-your-own model, orchestration ready |
| Scalability | Prototype friendly | Manual effort | Designed for prototyping | Suitable for small pilots | Built for production-scale workloads |
| Ease of use | Moderate | High complexity | Easy (no-code) | Easy (no-code) | Mid-level (developer-first) |

While many tools help you get started, few are designed to scale. The further you move toward production, the more important it becomes to have full control over latency, media, and infrastructure.

Why Telnyx is the best Vapi alternative

Vapi helps teams prototype quickly, but delivering real-time, production-grade voice assistants requires deeper control over performance, infrastructure, and call logic. Telnyx was built for this shift to scalable, enterprise-grade voice AI. With sub-30ms round-trip response times powered by in-house speech recognition and a private global voice network, Telnyx ensures consistently low latency and high reliability, even at scale.

The platform combines dedicated GPU infrastructure, interruptible audio, real-time transcription, and context streaming in a single system. Developers can bring their own LLMs or use Telnyx's built-in assistants, all while building with hosted tools, REST APIs, and CLI support. No more stitching together SIP, STT, and media layers—Telnyx unifies everything under one roof, giving you full programmability, observability, and flexibility from day one.

What sets Telnyx apart for Voice AI at scale

While most tools focus on voice generation or simple APIs, Telnyx delivers an end-to-end platform for real-time, production-grade Voice AI. Here's what sets Telnyx apart and why it matters in real-world deployments.

| Core capability | Why it matters | Real-world impact |
|---|---|---|
| Dedicated GPUs | Ensures consistent performance and fast inference times | Your voice agent responds instantly, even under load |
| Private IP network | Reduces jitter, packet loss, and public internet latency | Crystal-clear audio and reliable call quality at scale |
| Programmable media engine | Enables you to control streaming, barge-in, and silence detection | Build interruptible, natural-feeling conversations |
| Built-in speech-to-text | Eliminates the need for third-party transcription integration | Lower latency and simplified architecture |
| SIP + telephony stack | Native call control and routing | Deploy in production without managing carrier infrastructure |
| Hosted voice builder | Rapid prototyping with no setup | Teams can test and iterate quickly without engineering bottlenecks |

These capabilities are the difference between a system that sounds good in a demo and one that performs reliably under pressure. Telnyx brings all of these together into a single, developer-friendly platform.

Elevate your voice AI with Telnyx

"Vapi still rides on Twilio, and users are already complaining about garbled, jitter-ridden audio."

Ian Reither, COO @ Telnyx

As you scale voice AI from prototype to production, the right infrastructure becomes indispensable. Telnyx stands out with an integrated platform that combines voice, media, and AI capabilities. By operating its own global voice network, Telnyx delivers low latency and reliable performance, giving you the tools to build responsive, real-time voice assistants without the hassle of managing multiple vendors.

Telnyx offers unparalleled control and reliability, enabling you to create custom solutions tailored to your unique needs. With real-time audio streaming, intuitive API access, and comprehensive observability, Telnyx provides the foundation for building scalable voice solutions that adapt and grow with your business. Whether you're upgrading from Vapi or starting fresh, Telnyx's robust infrastructure and support make it the go-to choice for developers aiming to deliver exceptional voice experiences.

Talk to our experts to learn how Telnyx can take your voice AI projects to production.

Have Vapi alternative questions? Join our subreddit.
